How do you know if a finding in social science is, well, real?

Students of science are taught not to take the results of a single study as the absolute truth. The rule of thumb is to seek out systematic reviews, or meta-analyses, which pull together lots of studies looking at the same question and weigh their rigor to come to a more fully supported conclusion.

But can we trust all this meta-science? Not necessarily. Positive, confirmatory findings often make their way into the published literature, while negative findings collect dust in file drawers. This means meta-analyses, too, can be biased.

Adding to the confusion is the fact that in recent years, social science theories, like the willpower theory of ego depletion, have come crashing down when rigorously retested. The resulting “replication crisis” has led many scientists to wonder: How many findings in psychological science would hold up in rigorous retesting?

They’ve been answering that question by embarking on large-scale replication efforts. But wouldn’t it be helpful — for deciding which ideas are worth funding, for deciding which ideas are worth publicizing — to be able to predict the answer without investing the time and resources to retest an experiment?

Perhaps a machine-learning-derived computer program (a form of AI) that could rapidly assess and predict the reliability of a scientific finding would be helpful.

As it turns out, DARPA (the military’s Defense Advanced Research Projects Agency) is trying to build one. The project is called SCORE (Systematizing Confidence in Open Research and Evidence), and it’s a collaboration with the Center for Open Science in Virginia, with a price tag that may top $7.6 million over three years.

It’s potentially a really cool and useful idea. But it’s not guaranteed to work.

Why does the military care about the replication crisis?

First off, you might wonder why the Pentagon cares about predicting social science study results.

The military is interested in the replicability of social science because it’s interested in people. Soldiers, after all, are people, and lessons from human psychology — lessons in cooperation, conflict, and fatigue — ought to apply to them. And right now it’s hard to assess “what evidence is credible, and how credible it is,” says Brian Nosek, the University of Virginia psychologist who runs the Center for Open Science. “The current research literature isn’t something [the military] can easily use or apply.”

A computer program that quickly scores a social science finding for its potential to replicate would be useful to lots of others too: science funding agencies trying to decide what’s worth spending money on, policymakers who want the best research to inform their ideas, and members of the general public curious to better understand themselves and their minds. Science journalism would benefit as well, gaining an additional sniff test to avoid hyped-up findings.

Assessing the quality of science is tricky, often unsystematic, and filled with potential for bias. It’s often not enough to judge a finding based on the journal it was published in (prestigious journals certainly endure replication failures and retractions), or which university it came from (look no further than the Cornell Food and Brand Lab for a cautionary tale against that), or whether results were “statistically significant” (an extremely fraught metric to rate the quality of science).

This computer program sounds really useful. Could it actually work?

The idea is that with machine learning, a type of artificial intelligence, a computer can look for patterns among papers that failed to replicate and then use those patterns to predict the likelihood of future failures. If scientists saw a psychological theory was predicted to fail, they might take a harder look at their methods and question their results. They might be inspired to try harder, to retest the idea more rigorously, or give up on it completely. Better yet, the program could potentially help them figure out why their results are unlikely to replicate, and use that knowledge to build better methods.
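To make the pattern-finding idea concrete, here is a minimal sketch of how such a predictor could be trained. It is not SCORE's actual method, which the article does not describe. The two features (sample size and reported p-value), the function names, and every number in the toy data set are assumptions invented for illustration. The model is a plain logistic regression fit by gradient descent.

```python
import math

# Invented toy records, NOT real study data:
# (log10 of sample size, reported p-value, 1 if the finding replicated else 0)
TOY_STUDIES = [
    (1.3, 0.049, 0),
    (1.5, 0.041, 0),
    (1.6, 0.030, 0),
    (1.4, 0.045, 0),
    (2.5, 0.001, 1),
    (2.8, 0.004, 1),
    (3.0, 0.002, 1),
    (2.3, 0.010, 1),
]


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(records, steps=2000, learning_rate=0.5):
    """Fit a two-feature logistic regression with plain gradient descent."""
    w_size, w_p, bias = 0.0, 0.0, 0.0
    n = len(records)
    for _ in range(steps):
        g_size = g_p = g_bias = 0.0
        for log_n, p_value, replicated in records:
            # Difference between the predicted probability and the outcome.
            error = sigmoid(w_size * log_n + w_p * p_value + bias) - replicated
            g_size += error * log_n
            g_p += error * p_value
            g_bias += error
        # Step each parameter against its average gradient.
        w_size -= learning_rate * g_size / n
        w_p -= learning_rate * g_p / n
        bias -= learning_rate * g_bias / n
    return w_size, w_p, bias


def predict_replication(model, log_n, p_value):
    """Return the model's estimated probability that a finding replicates."""
    w_size, w_p, bias = model
    return sigmoid(w_size * log_n + w_p * p_value + bias)


model = train(TOY_STUDIES)
# A large study with a very small p-value versus a small, barely significant one.
print(round(predict_replication(model, 3.0, 0.002), 2))
print(round(predict_replication(model, 1.3, 0.049), 2))
```

A real system would need far more data and far richer features than this, and the learned weights are what researchers would inspect to see why a finding was scored the way it was.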

So where would it start? The Center for Open Science is currently embarking on an enormous task to create a database of findings for the computer programs to crawl through. It’s also facilitating rigorous replication projects of past findings, which will add more data to the mix and, eventually, allow DARPA’s computer program to validate its predictive ability.

“The fundamental question is can we build an algorithm that will help us better understand the amount of confidence we should have in a published article, or a particular claim in the research,” says Adam Russell, the DARPA scientist (an anthropologist by training) in charge of the program.

Nosek and Russell say they can’t be sure such a program would work. But they have a hunch it could. And that’s because humans have been able to make some accurate predictions about replicability.

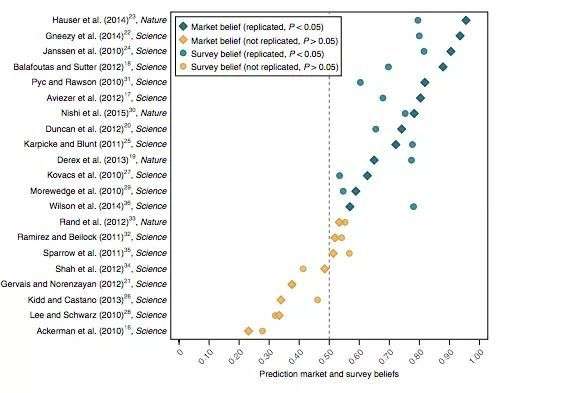

Recently, a team of social scientists (including Nosek, and spanning psychologists and economists) attempted to replicate 21 findings published in the most prestigious general science journals: Nature and Science. The big finding, published in Nature Human Behaviour, was that 13 of the 21 results, or about 62 percent, replicated. That’s roughly in line with what other efforts have found: Around half of social science studies, when retested, replicate. And the ones that do replicate tend to have less impressive findings the second time around.

But perhaps more interesting is that the effort included a test to see whether a group of scientists could predict, via placing bets, which studies would replicate or not. The bets largely tracked with the final results.

In the study’s chart, the yellow dots mark the studies that did not replicate, and all of them were ranked unfavorably by the prediction market survey.

“These results suggest [there’s] something systematic about papers that fail to replicate,” Anna Dreber, a Stockholm-based economist and one of the study co-authors, said after the study’s release. And it’s not just about methods. Some of the papers that failed to replicate fall into the category of “too good to be true,” like an experiment that found that the act of washing hands also psychologically relieves people of a common memory bias.

It’s possible that whatever intuition the scientists were using to place their bets could be replicated, and improved upon, with machine learning.

A machine could potentially pick up on patterns not as readily perceptible to humans. It could also make predictions faster and at less cost. “Running a prediction market for every single finding that comes out is not very feasible,” Nosek says.

How might it fail?

The first thing a computer program will have to prove is that it’s as good as, or better than, the human experts at making predictions.

But even if it can, the approach is also a bit fraught. When AI is brought in to predict things in the real world, it can often just reinforce the status quo and perpetuate bias. What if DARPA’s computer program learns, hypothetically speaking, that research from authors of a certain marginalized demographic group is less likely to replicate? Perhaps these people just haven’t been awarded the resources they need to conduct sound science.

“I think it’s wise to still always have a final sanity check by a human if you want to draw conclusions about an individual paper,” Michèle Nuijten, a professor of methodology at Tilburg University who helped create a (much simpler) automated system for checking math errors in academic papers, says in an email. “An automated tool would simply facilitate this process by flagging potential problems (small sample size, a set of p-values that is just significant, etc.)”
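The kind of flagging Nuijten describes can be sketched in a few lines. This is not her tool and not anything DARPA has built. The function name and every threshold below (a minimum sample size of 50, a significance cutoff of 0.05, and a “just significant” band starting at 0.04) are arbitrary assumptions chosen for illustration.

```python
def flag_potential_problems(
    sample_size,
    p_values,
    min_sample_size=50,           # hypothetical cutoff for a "small" sample
    alpha=0.05,                   # conventional significance threshold
    just_significant_floor=0.04,  # hypothetical lower edge of "just significant"
):
    """Return a list of plain-language warnings for a human reviewer to check."""
    flags = []

    if sample_size < min_sample_size:
        flags.append("small sample size")

    # Keep only the p-values that count as significant, then ask whether
    # every one of them sits in the narrow band just under the threshold.
    significant = [p for p in p_values if p < alpha]
    if significant and all(p >= just_significant_floor for p in significant):
        flags.append("all significant p-values are just under the threshold")

    return flags


# A small study whose results barely clear the bar gets both warnings.
print(flag_potential_problems(20, [0.048, 0.041]))
# A large study with a clearly significant result gets none.
print(flag_potential_problems(500, [0.001, 0.2]))
```

The output is a list of warnings, not a verdict, which fits Nuijten’s point that a human should still make the final call on any individual paper.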

The system will have to be transparent, in the sense that it not only makes predictions but also tells researchers how it got there. In doing so, it could shed light on previously unappreciated flaws and biases.

A bigger goal of recent efforts to assess the replicability of social science is not just to figure out which theories are wrong. It’s to figure out the components of good science. AI, if used well, could help scientists figure that out.

“It’s entirely possible that the tools themselves will be road maps for how to improve research and, in some cases, identify new biases — something we didn’t know about the science research ecology,” Russell, the DARPA scientist, says. “This is not about making algorithms that will replace peer reviewers, that will replace humans; it’s about how do we bring humans and machines together?”

And another question will remain: Even if DARPA can build this tool, will people feel comfortable taking recommendations from it? That’s a question many fields are grappling with as they introduce more AI into their methods.

“It’s very natural to feel some degree of trepidation of giving ourselves up to the machines,” Nosek says. But he doesn’t want to use them in a way to eliminate human bias in science. “I don’t think we need to get rid of bias; I don’t think that is actually possible. What I want to do is expose it,” Nosek says. And if AI can help, that’s a good thing.

Source: vox.com