Instagram announced on Wednesday, in a short company blog post, that it would make the app more accessible to the visually impaired.

“With more than 285 million people in the world who have visual impairments, we know there are many people who could benefit from a more accessible Instagram,” the company wrote, before describing the new functionality: alternative text descriptions for photos in the Feed and the Explore sections of the app, which would allow screen-reading software to automatically describe photos out loud to users.

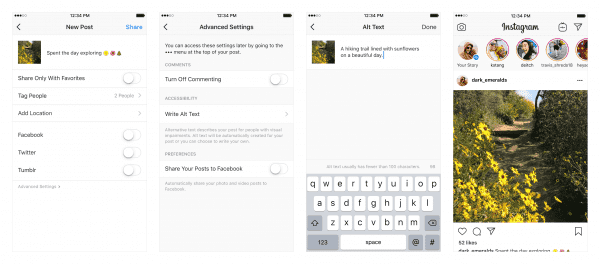

Users can manually add the descriptions in the advanced settings of their posts. That is only a slight tweak on what many accessibility-conscious users were already doing: putting more detailed or literal descriptions of their photos directly in the image's caption. More importantly, Instagram will use its object recognition capabilities to automatically generate descriptions for images whose posters don't provide them.

Responses to the addition were generally warm, with the company receiving thanks from visually impaired users in reply to the announcement tweet. But the change was also widely discussed on Twitter as merely a start, particularly under the #a11y hashtag. "A11y" is a numeronym for "accessibility" (the 11 stands for the letters between the "a" and the "y"), and it is also the name of the A11Y Project, an online accessibility movement that shares open source accessibility-friendly software information on GitHub and operates a blog about design and internet community.

The Royal National Institute of Blind People, a British nonprofit, commended Instagram, tweeting congrats on the company’s commitment to accessibility, but also asked that the feature be extended to Stories — important, considering 40 percent of the platform’s 1 billion users post to their Story every day. Louise Taylor, a software engineer at the BBC, tweeted that the feature would perhaps be slightly more usable “if the option to add alt text was easier to find than by clicking on a button with very questionable contrast.”

Others were slightly confused as to why the addition took so long, reacting with a friendly “finally.”

Another thing that’s a little odd here: Instagram’s object recognition capabilities seemingly would have allowed this feature to exist for some time.

The story of how Instagram tweaks its algorithm, identifying content that’s most relevant or interesting to its users, has always been a little opaque, but we know object recognition is at least one part of the puzzle. Facebook has been using Instagram’s wealth of public photos to train its machine learning models, as revealed by chief technology officer Mike Schroepfer at the company’s annual developer conference in May. He didn’t say how long the company had been doing this, but he did say it had already processed 3.5 billion images from Instagram, and that the company had “produced state-of-the-art results that are 1 to 2 percent better than any other system on the ImageNet benchmark.” (ImageNet is the online visual database most commonly used for object recognition software testing.)

Object recognition is obviously a priority for Instagram and its parent company — it will be useful for their advertising business if it isn’t already, and their moderation protocols definitely demand that it be very good.

Facebook, for its part, has had artificial intelligence-generated alternative text as an accessibility feature since April 2016, and allowed users to manually input text for some time before that. Even setting aside the challenges of AI, manual input options for alternative text are more or less standard across the web, and Twitter added them in 2016. If it took this long for Instagram to tack a new text input box onto its app, it’s kind of hard to believe that’s because it was technologically difficult, and not because it was an afterthought.

Reached for comment, an Instagram spokesperson said accessibility features have been “an ongoing project for some time,” and that they were unable to be more specific about the timeline.

“The challenge was ensuring that we could provide valuable descriptions at scale,” the spokesperson said. Essentially: It’s not that the system can’t recognize what’s in the photo, it’s that it struggles to determine what’s important about it, and to establish context.

I was also directed to a research paper Facebook published last February, explaining the problems it had dealt with when it built the automatic alternative text feature for the Newsfeed, and how the feature had been received in its first 10 months in use:

Fair! However, when I asked why it took two and a half years for the Facebook feature to be tweaked to work for Instagram, the representative responded simply that Instagram is “predominantly visual,” and that investments in accessibility take time. This is an important change that deserves celebration; it’s also a change that took two and a half years.

Source: vox.com