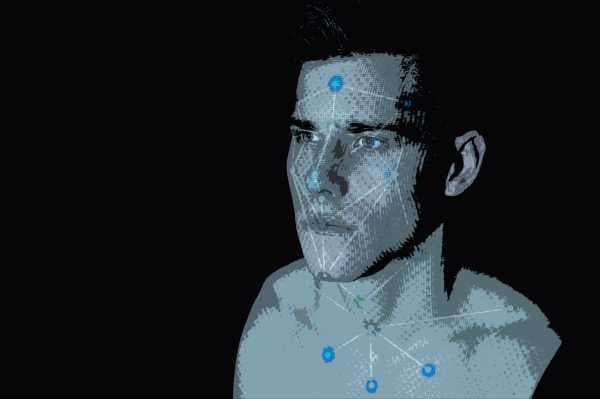

In September, Stanford researcher Michal Kosinski published a preprint of a paper that made an outlandish claim: The profile pictures we upload to social media and dating websites can be used to predict our sexual orientation.

Kosinski, a Polish psychologist who studies human behavior from the footprints we leave online, has a track record of eyebrow-raising results. In 2013, he co-authored a paper that found that people’s Facebook “likes” could be used to predict personal characteristics like personality traits (a finding that reportedly inspired the conservative data firm Cambridge Analytica).

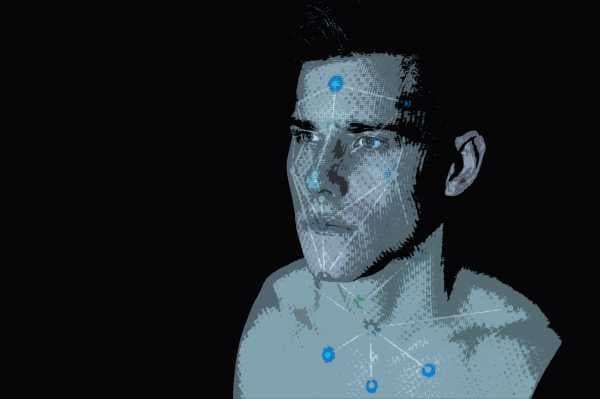

For the new paper, Kosinski built a program with his co-author Yilun Wang using a common artificial intelligence program to scan more than 30,000 photos uploaded to an unnamed dating site. The software’s job? To figure out a pattern about what could distinguish a gay person’s face from a straight person’s.

When choosing between a pair of photos, the resulting program accurately identified a gay man 81 percent of the time and a gay woman 71 percent of the time. The researchers also asked humans to do the same task — and they fared worse, guessing correctly 54 to 61 percent of the time.
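To make the "pair of photos" test concrete, here is a minimal sketch of how a pairwise accuracy figure like this can be computed from a classifier's scores. The function name and the scores are invented for illustration and are not taken from the paper; the only assumption is that the program assigns each photo a number and picks the higher-scoring photo in each pair.

```python
from itertools import product


def pairwise_accuracy(target_scores, other_scores):
    """Share of (target, other) pairs in which the target photo scores higher.

    A tie counts as half a correct guess, as if the program flipped a coin.
    """
    wins = 0.0
    for target, other in product(target_scores, other_scores):
        if target > other:
            wins += 1.0
        elif target == other:
            wins += 0.5
    return wins / (len(target_scores) * len(other_scores))


# Hypothetical classifier scores, invented purely for illustration.
target_group = [0.9, 0.7, 0.6, 0.4]
other_group = [0.8, 0.5, 0.3, 0.2, 0.1]

accuracy = pairwise_accuracy(target_group, other_group)
print(f"Pairwise accuracy: {accuracy:.2f}")
```

With these made-up numbers, the higher-scoring photo belongs to the target group in 16 of the 20 possible pairs, so the sketch prints 0.80.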

More controversially, Kosinski and Wang’s paper claimed that the program based its decision on differences in facial structure: that gay men’s faces were more feminine and lesbian women’s faces were more masculine. They suggested this finding was in line with the prenatal hormone theory of sexual orientation, which suggests our sexuality is, in part, determined by hormone exposure in the womb. This conclusion is hotly contested by some of their colleagues, who say the research is confounded by the fact that the photos were uploaded by the users themselves and not taken in a neutral lab setting.

But even if the algorithm is just picking up on personal choices, the result feels unsettling, like the first stone on a path to a Black Mirror future.

LGBTQ rights groups decried the research, saying it’s based in pseudoscience and poses a danger to members of the LGBTQ community around the world. Other researchers in psychology decried it as physiognomy, the long-defunct pseudoscience of attributing personality traits to physical characteristics. The paper is expected to run in a February edition of the Journal of Personality and Social Psychology.

Kosinski insists his intent was never to out anyone, but rather to warn us about the rapid extinction of privacy.

And sexual orientation, he argues, is a start. Many more aspects of our inner lives — like personality traits — may be encoded in our faces. This is also a controversial idea, which harks back to the pseudoscience of physiognomy.

When I spoke to Kosinski in November at his Stanford office, I was most interested in learning why he’s asking these controversial research questions, and whether his “warning” of a loss of privacy is actually just a blueprint to achieve it. He argues these technologies are already being used and have a huge potential for misuse.

Industries, governments, and even psychologists can mine the digital footprints we leave everywhere and learn things about ourselves we wouldn’t necessarily be willing to share. “Basically, going forward, there’s going to be no privacy whatsoever,” he says.

This conversation has been edited for length and clarity.

Kosinski wants to study human behavior via digital footprints

Brian Resnick

You’ve become pretty famous in the past few years. I was first familiar with your work on deriving personality profiles from Facebook. [This is the work that would inspire Cambridge Analytica.]

But that’s a whole other story than the “gaydar” machine.

Michal Kosinski

It’s exactly the same story.

Brian Resnick

Really. How so?

Michal Kosinski

My overarching goal is to try to understand people, social processes, and behavior better through the lens of digital footprints.

It proves pretty difficult to study human behavior and psychology using big data. But there’s huge promise there. What’s interesting are the tiny details that we may actually notice in big data, and the effect might be tiny.

You can very easily create a “black box” that would take some of this digital footprint data and produce very accurate predictions of future behavior and psychological traits. By studying what’s inside this black box, we may understand behavior better. Even without studying the black box, we already know that the computer, in its own computer’s way, understood the links.

Brian Resnick

By “black box,” you mean artificial intelligence and neural networks. That’s where computers are trained to make predictions off data, but you, the researcher, don’t know how it’s making the prediction. That prediction might be interesting, but it doesn’t always help you hypothesize about what’s really going on.

Michal Kosinski

Exactly.

It proves to be uncomfortably accurate at making predictions.

We know that companies are already collecting this data and using such black boxes to predict future behavior. Google, Facebook, and Netflix are doing this.

Basically, most of the modern platforms are just virtually based on recording digital footprints and predicting future behavior.

Why computers may be better at reading faces than humans

Brian Resnick

So for this project, the digital footprint you studied was profile pictures: 35,000-plus photos obtained from a dating website. Why did you want to study faces?

Michal Kosinski

A few reasons.

First of all, maybe only five years ago, computer technology reached the stage at which you can run [these] analyses on your computer or laptop, as opposed to needing a supercomputer.

Before, we just couldn’t really do it. We could study faces, but we would have to use very inaccurate measures. We had people judging the faces.

Brian Resnick

Okay. But why did you think the computer could correlate facial attributes with personal characteristics?

Michal Kosinski

People can predict intimate traits of others from their faces. Gender is an example. Emotion, age, Down syndrome, developmental disease. There’s a huge range of things we can detect from people’s faces.

Someone told me in the response to my recent paper that “extraordinary claims require extraordinary evidence.” What strikes me is it’s extraordinary to claim there is no sign of sexual orientation on your face, because so many other things — gender, age, and so on — leave signs on human faces.

Psychologists would say, “Oh, yes, that’s true, but not personality. This is just pseudoscience.” I’m like, wait. You can accept that you can predict 57 things, but if I say, “What about 58?” you say, “This is absolutely theoretically impossible. This is pseudoscience. How can you even say that?”

Brian Resnick

People aren’t great at intuiting sexual orientation from just looking at a person’s face. Why would a neural network do better?

Michal Kosinski

Wherever we try to apply neural nets to do something that humans were doing before, with very little tinkering you can get results that are better than what humans can do. This is amazing — in many cases, like self-driving cars, they are already safer than humans. Planes land themselves. We have all of those AI-based tools around us that make our lives better.

We have many years of evidence that humans judge personality, intelligence, and political views from people’s faces with little accuracy.

Why? Maybe because people cannot perceive [these things]. [For instance,] we focus on the wrinkles around your eyes because this is useful in judging emotion. But we don’t focus on wrinkles elsewhere. [Meaning there may be some clues to a person’s sexuality or personality in the face that we just don’t pay attention to.]

The second is that maybe people, even if they perceive, they cannot interpret. [That is, we see the signs on a person’s face that relate to personality or sexual orientation, but we don’t connect the dots.] Our neural networks might be trained better to judge.

“Can you please check how threatening this bomb is?”

Brian Resnick

Of all of the personal traits or characteristics to study, why did you choose sexual orientation for this paper? It was sure to be controversial.

Michal Kosinski

Because it’s most urgent. I already ran studies on political views, personality, IQ, and a bunch of other things. Those other things, they’re interesting. They’re potentially important. But when it comes to sexual orientation, it’s super urgent.

If you asked me, “Hey, Michal, why don’t you become a scientist to build explosives to build a new bigger bomb?” I would be like, “I don’t think people should be doing that.”

Now, if you asked me, “We already have this bomb. We have these algorithms. Can you please check how threatening this bomb is?” Which is essentially what I tried to do. I think this is a really important aspect because we already have the danger. We have the risk, and we try to find ways to neutralize it.

A smart person with a computer and access to the internet can judge the sexual orientation of anyone in the world, or millions of people simultaneously, with very little effort, which makes the lives of homophobes and oppressive regimes just a tiny bit easier.

Brian Resnick

You say that this research is, in part, a warning about our loss of privacy. But then, aren’t you giving the world a blueprint to invade people’s privacy?

[Note: I should mention, the “gaydar” machine Kosinski created isn’t all that practical to use in the real world. The algorithm was tested by analyzing pairs of photos, one of a straight person and one of a gay person. The computer had to make a guess about who was gay. It chose correctly 81 percent of the time. This is not the same task as picking seven gay men out of a lineup of 100.]
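The gap between the paired-photo test and the lineup task can be worked through numerically. The sketch below is only an illustration under stated assumptions: it models the program's scores for the two groups as two bell curves with equal spread (an assumption, not something the paper reports), sets their separation so that the paired-photo accuracy is 81 percent, and then asks what happens when the program flags the top-scoring 7 percent of a population in which 7 in 100 people belong to the target group. All function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def separation_for_pairwise_accuracy(accuracy):
    # Assumption: equal-variance normal scores. The difference between two
    # such scores is normal with variance 2, so the pairwise accuracy is
    # Phi(d / sqrt(2)), which we invert here to get the separation d.
    return sqrt(2) * STD_NORMAL.inv_cdf(accuracy)


def flagged_shares(threshold, separation, base_rate):
    # Shares of the whole population flagged correctly and incorrectly.
    true_pos = base_rate * (1 - STD_NORMAL.cdf(threshold - separation))
    false_pos = (1 - base_rate) * (1 - STD_NORMAL.cdf(threshold))
    return true_pos, false_pos


def precision_when_flagging_top(base_rate, accuracy, flag_rate):
    separation = separation_for_pairwise_accuracy(accuracy)
    low, high = -10.0, 10.0
    for _ in range(100):  # bisection on the score threshold
        mid = (low + high) / 2
        true_pos, false_pos = flagged_shares(mid, separation, base_rate)
        if true_pos + false_pos > flag_rate:
            low = mid   # too many people flagged: raise the threshold
        else:
            high = mid  # too few people flagged: lower the threshold
    true_pos, false_pos = flagged_shares((low + high) / 2, separation, base_rate)
    return true_pos / (true_pos + false_pos)


precision = precision_when_flagging_top(base_rate=0.07, accuracy=0.81, flag_rate=0.07)
print(f"Share of flagged people who are in the target group: {precision:.2f}")
```

Under these assumptions, the printed share falls well below 81 percent, because most of the top-scoring faces in a realistic lineup come from the much larger straight group.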

Michal Kosinski

I’m taking an existing weapon [AI and facial recognition], and I’m saying it’s already being used.

Brian Resnick

Who is using it?

Michal Kosinski

Governments are already doing this in practice.

[Note: There’s one startup called Faception that claims it can detect terrorists or pedophiles just by looking at faces, and says it has contracts with governments. It has been reported that Kosinski was an adviser to Faception. He says this is a mischaracterization, and that he has accepted no money from them. He says, “I’m happy to give advice,” and, “Look, if you study how chemicals are dangerous, you should be advising companies producing chemicals.”]

Many fear AI will just be used to discriminate under the guise of objectivity

Brian Resnick

There’s a fear with AI that these networks are really just learning stereotypes.

With the photos of people’s sexual orientation, in your paper you noted these are people’s self-selected photos. You didn’t take 30,000 people and bring them into a lab and take perfectly neutral photos of them. So your program could be picking up on things other than face shape, like makeup or grooming. That it’s not picking up on something biological, as your paper claims, but it’s picking up on choices.

Michal Kosinski

The whole point of this paper is that you don’t need to bring people to the lab. You can just take their pictures from Facebook or from a dating website, and the prediction is possible.

The whole idea of machine learning is that you can train it on the original sample, and then as the machine works, it will just start matching patterns and noticing patterns and enriching the model.

I expect that if you take the model trained on dating websites and apply it to a DMV database of driving license photos, at the beginning, first few thousand, the model will probably struggle. Like learn there is a new pattern of lighting or what not, but this is the idea of machine learning and neural networks. It automatically will adjust. Even without any additional training data, it will improve the accuracy. It learns about the patterns in new images.

Brian Resnick

There was a paper that came out of China where researchers used facial recognition to look at drivers’ license photos and predict who is a criminal. It purported to have an accuracy of 89.5 percent. I’ve talked to some experts about this, and the biggest concern there was this: What was this program picking up on? Could it be physical features of certain ethnic groups that are discriminated against in the justice system?

Michal Kosinski

You can, but stereotypes also might be accurate. It might be that gay people … I don’t know, talk differently. But it may also be true that to some extent, they talk differently. But humans have a problem with judging probability.

The beauty of a computer is it can go beyond the stereotype. Use the stereotype proper. Like, the stereotype developed by a computer will be an accurate one. It’s a data-driven thing.

The point is even if the prediction is not very accurate, you can sort people from most likely to least likely and just focus your efforts at the top. When it comes to, say, finding criminals, like people that want to blow themselves up or kill your grandma, I think there are still problems with using such technology to detect such people, but I’m open to discussing it.

“There will be biases. Nothing is perfect in AI.”

Brian Resnick

Let’s say you did a machine learning project using police mug shots in the United States.

Wouldn’t a machine learning program learn there’s racial prejudice in our justice system? But it wouldn’t know it’s racial prejudice — it would just begin to associate darker complexions with criminality. And then if you used that machine to predict who is a criminal, all you’d be doing is reinforcing a stereotype.

Michal Kosinski

If you trained the model in a stupid way, then of course. You can make an algorithm as racist as no human being has ever been in the history. You can make a stupid thing, no question about it.

We can train algorithms in such a way as to not be racist, minimize those biases, and, as opposed to humans, we can control for these biases. We can examine how algorithms work.

Also, we have to compare the algorithms with what we already have.

People say, “Oh, if you use algorithmic hiring, sentencing, or whatnot, there will be biases.” I agree.

There will be biases. Nothing is perfect in AI. It’s not perfect, but you always have to compare it with the status quo.

What is the alternative? The alternative is using humans. Humans are racist, sexist, ageist, corrupt, unfair, get tired, and we know that. We understand this very well.

People think morality is outside the realm of science. Integrity is outside of the realm of science. This, of course, is silly.

Brian Resnick

Can you understand why people were afraid of this?

Michal Kosinski

I understand the gut feeling. I’m not writing this paper with pleasure. It freaks me out that it’s possible. I would love for those results to fail to replicate. It would just mean that we have one problem less as humanity, but give me a chance to sit down and talk it through with someone and actually show them the paper. Have them read the paper and the hate goes away.

I had a number of those experiences, including with people who sent me death threats.

Brian Resnick

What’s the big story here? The takeaway.

Michal Kosinski

The big story is that we’re not going to have any privacy very soon. I’m all for privacy-protecting laws and technologies, but people keep forgetting that they’re just doomed to fail. Equifax had amazing data protecting technologies. If big companies and governments cannot protect their data, how can you believe that you or our readers or I would be able to protect our data? The motivated party is out there to get them. They will get the data, however much they encrypt it.

Also, even if we somehow magically get full control of our data, we still want to share the data. We want to tweet. We want to write stuff on Facebook. We want to walk the streets without covering my face and distorting my voice. This is already enough to make very accurate predictions of my sensitive traits [like personality, politics, wealth]. Basically, going forward, there’s going to be no privacy whatsoever.

Brian Resnick

There’s nothing we can do.

Michal Kosinski

There’s little we can do.

Now we have to sit down, and the sooner we sit down and start thinking about how to make sure that the post-privacy world — or post-privacy world for some people, like for poor, underprivileged people living in non-liberal, non-Western countries, if their world suddenly becomes a post-privacy world — how do we make sure that it’s still a safe and habitable place to live?

Source: vox.com