
In a provocative op-ed in the New York Times last week, PayPal and Palantir co-founder Peter Thiel argued that artificial intelligence is “a military technology.” So, he asked, why are companies like Google and Microsoft, which have opened research labs in China to recruit Chinese researchers for their cutting-edge AI work, “sharing it with a rival”?

Thiel’s op-ed caused a big splash in the AI community and frustrated experts in both AI and US-China relations. An outspoken Trump backer, Thiel has been a leading voice pushing for tech to be more aligned with what he sees as America’s defense interests — and his messages have been influential among conservative intellectuals.

Critics pointed out that Thiel had failed to disclose that his company, Palantir, has defense contracts with the US government totaling more than $1 billion, and that he might benefit from portraying AI as a military technology (a characterization of AI that experts dispute). Other critics noted that he gets basic facts about China wrong, such as his claim that Chinese law “mandates that all research done in China be shared with the People’s Liberation Army,” which is not true.

But the op-ed resonated nonetheless. Why? Because members of the global AI community are grappling with the powerful technology they’re developing. And when it comes to China, they are grappling in particular with the ways the Chinese government has embraced that technology to increase authoritarian control of the population and commit atrocities like the mass internment of Uighurs.

So there are real reasons to be concerned about the progress of AI in China, and to wonder if US companies have made principled decisions about what research they’re conducting and sharing.

But Thiel’s take misses the point — dangerously so. Its focus on condemning all research conducted in China, its insistence that AI is a military technology, and its choice of a Cold War, us-against-them framing will make the situation worse for the development of safe AI systems, not better. And because safe, cautious AI development is one of the biggest challenges ahead of us, those mistakes could be costly.

The state of AI in China

The Chinese government and Chinese companies are tremendously invested in, and intrigued by, AI. In surveys, Chinese CEOs and policy thinkers consistently give AI much more weight than their Western counterparts do.

Many analysts trace China’s AI fascination back to one moment: AlphaGo. In 2016, Google/Alphabet’s DeepMind released an AI system that could beat top pros at the strategy game Go. In much of East Asia, Go is a highly regarded pastime and considered a deeply significant test of skill and strategic thinking.

Analysts have called AlphaGo China’s “Sputnik moment,” comparing how it galvanized Chinese researchers to how Americans were deeply affected by the successful Soviet launch of the world’s first artificial satellite. When AlphaGo crushed human experts, China ramped up its investment in AI.

Despite this increased investment, experts agree that the US and the West are still at the forefront of AI research. More machine learning papers are published in China than in the United States, but so far the cutting-edge innovations of the machine learning era have come from the West. Many of them have come from Google, either via DeepMind, a London-based AI startup that Google acquired in 2014 and that now sits under its parent company Alphabet, or via Google’s own AI division.

That’s not to say there isn’t a tremendous AI talent pool in China. Many leading American AI researchers are foreign-born. What has given the US its AI advantage has been, in significant part, the fact that the US attracts AI talent from all over the world. While America is a much smaller country than China, it’s drawing on what is effectively a much larger talent pool, including attracting many top Chinese researchers.

But there’s a deep talent pool in China too. And American tech giants from Google to Amazon to Microsoft have opened research departments in China and elsewhere in Asia to tap it.

“These efforts to create talent bases in other countries could potentially be good for US innovation and expanding our lead in these areas,” Jeffrey Ding, a China AI policy expert at Oxford’s Center for the Governance of AI, told me. “A lot of our technological advantage comes from our ability to adapt. Having these R&D centers in China allows us to not only get the best and brightest from China but also serves as a listening post, an absorption channel of sorts, a way to be kept up to date on the Chinese ecosystem.”

Thiel argues that operating such centers is, effectively, giving away advanced US AI secrets to America’s enemies. While he exaggerates this risk, it isn’t invented — some researchers at Google or Microsoft labs in China go on to work for the Chinese military, taking their expertise with them. Occasionally research is outright leaked, which, while not great, is certainly not reason enough to curtail research.

But the picture Thiel paints is incomplete, Ding says.

For one thing, the talented Chinese researchers who are working right now for US companies wouldn’t go away if US companies stopped hiring them; they’d instead work for other Chinese companies or for the Chinese military. “Either we try to get the best and brightest, or they have other options,” Ding told me. If we’d rather someone work for Microsoft than the Chinese military, why take away the option of working for Microsoft?

For another, the vast majority of the research happening at these labs isn’t of any interest to the Chinese military — it’s work like trying to improve Microsoft’s chatbot or voice recognition. There’s no obvious route for that work to cause problems. After Thiel raised the alarm about Google’s activities earlier this month, the Trump administration looked into it. Treasury Secretary Steve Mnuchin said later, “We’re not aware of any areas where Google is working with the Chinese government in any way that raises concerns.”

Overall, Ding argues, “you’re not going to be able to stop or slow down Chinese AI progress by stopping these labs.”

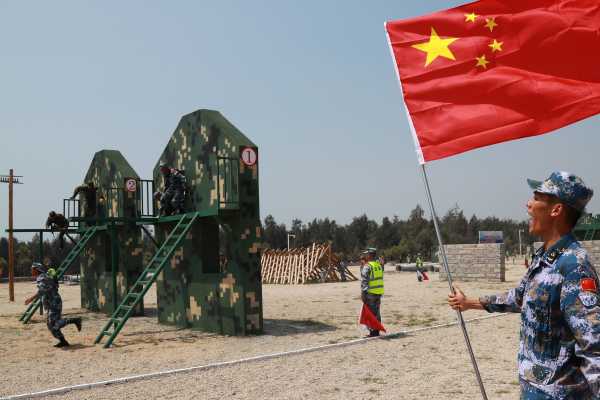

Thiel is right, though, to express grave reservations about China’s use of AI. A recent study found a sudden increase in facial recognition work in China’s AI research community shortly before the government began its frightening crackdown on Uighur Muslims, interning more than a million of them, most of whom have not been heard from since. AI researchers or not, we should all be sickened by totalitarian abuses like these.

And AI likely will have some military applications, which China, like the US, is no doubt exploring. With many US foreign policy experts concerned about China’s increasing influence in Africa and over its neighbors (including suspected violent intervention in peaceful protests in Hong Kong), no one finds the idea of increasing Chinese military capabilities appealing. The question is whether combating civilian AI collaborations does anything to stop that buildup.

To do AI right, we need to think about AI accurately

Thiel has a long history of involvement with AI development. But in the editorial, he mischaracterizes the way AI works and the key drivers of AI innovation — and that inaccurate picture makes it harder to address the real problems he mentions.

Even if US companies shut down their research centers in China, it will take much more than that to stop Chinese researchers from using information from recent advances in AI. That’s because lots of AI research is currently published in open access journals online, including enough detail and data to replicate the results of the papers.

Let’s be clear: That’s a good thing. Openness is good for science, and openness in AI has been good for the field. It allows people to try to replicate papers, learn things, test claims in published research, and stay abreast of the field even if they’re not at an elite institution. Ceasing to publish research would be a huge step, and it’s one the community is understandably resistant to.

That said, many AI researchers are increasingly realizing that the heyday of AI openness, where nearly all research is published for anyone in the world to explore, can’t last forever. AI research will probably have to go behind paywalls at some point. AI systems are getting more powerful, and that makes us more vulnerable to malicious misuses. And if, as some experts suspect, artificial general intelligence — an AI system that exceeds human capabilities across many domains — is achievable, then it would have to be developed extremely carefully to avoid catastrophic errors.

As a result, some leading AI labs have explored delays in publishing their research to address security and public interest concerns. As AI grows more powerful, more will join them. There’s absolutely space for a conversation about how to ensure that AI research is transparent, collaborative, and careful when it cannot all be published openly, and about whether policy in China is conducive to cautious progress on advanced technology.

But so far, the way people concerned about China have approached that conversation hasn’t been very productive. “There seems to be this trend to label tech companies who operate research and development labs in China as anti-American,” Ding told me, “and I think that’s dangerous.”

And that’s not the only dangerous element of Thiel’s characterization. Thiel writes that “A.I. is a military technology,” and that “the first users of the machine learning tools being created today will be generals rather than board game strategists.”

If you’ve checked out the tools to make fake faces of people who don’t exist, played StarCraft against a bot, read bot-created poetry, or interacted with a personal assistant like Alexa, Siri, or Google Assistant, then you’re a living disproof of Thiel’s claim.

Contra Thiel, AI is not primarily a military technology, though it will have military applications. Helen Toner, an analyst at Georgetown’s Center for Security and Emerging Technology, has argued that a better comparison than the atom bomb is electricity. Is electricity a military technology? No. Does our military use electricity? Yes, of course. But it’s not primarily that the military uses electric bombs; instead, electricity affects so many of our tools, and so much about how we interact with the world, that of course it affects our military as well.

Because Thiel’s company Palantir sells the US military AI technology, some critics saw the editorial as a marketing pitch, “speaking directly to government officials responsible for billions in defense contracting” to make the case that AI is crucial military technology they need to buy from Palantir. A more generous take might be that to Thiel, the military applications and implications of AI are the most salient ones.

But to face the real challenges that will accompany emerging transformative AI, we should have a more accurate picture of what AI actually is and does. Exaggerated comparisons to the atom bomb will make it harder to reason about the real, complex challenges that AI poses. Lots of the risks from AI are not risks that the wrong people will get it first, but that even well-intentioned people will accidentally design something with unpredictable behavior once it’s sufficiently capable.

And while an AI arms race, where the US closes itself off technologically and races to achieve AI before China does, might make sense if AI were like the atom bomb, it is deeply ill-advised for a technology with significant risks from human error — and significant gains to be had from cooperation, transparency, and civilian rather than military control.

None of this, of course, should let China off the hook. The atrocities being committed against minority populations in China are horrific, and the US is not doing enough to condemn and address them. There are tools in the US’s diplomatic toolbox — offering asylum to persecuted religious minorities in China, sanctions, development of tools Chinese citizens can use to circumvent controls on their internet and on their speech — that can and should be deployed to push back against Chinese human rights abuses.

But targeting AI labs that hire Chinese nationals is the wrong way to punish China — and the wrong way to understand the implications of AI for national security.


Source: vox.com