Finding the best ways to do good. Made possible by The Rockefeller Foundation.

Studies show that lots of Americans are worried that AI is coming for their jobs — Uber and Lyft drivers, couriers, receptionists, even software engineers. A remarkable exhibition match today suggested that another group that should be worried is … pro video gamers?

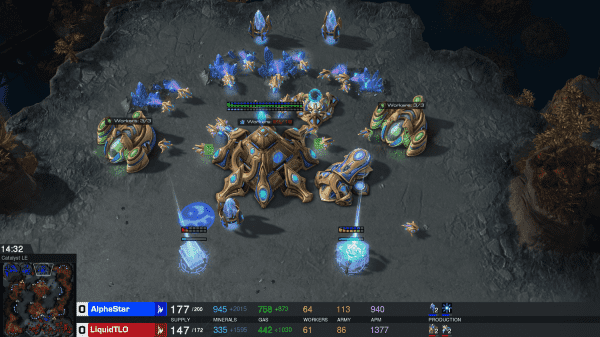

In a stunning demonstration of how far AI capabilities have come, AlphaStar — a new AI system from Google’s DeepMind — competed with pro players in a series of competitive StarCraft games. StarCraft is a complicated strategy game that requires players to consider hundreds of options at any given moment, to make strategic choices with payoffs a long way down the road, and to operate in a fast-changing environment with imperfect information. More than 200,000 games are played every day.

The demo was streamed live on YouTube and Twitch and had been highly anticipated — not just among gamers but also among AI enthusiasts — since it was announced on Tuesday.

The results were lopsided: AlphaStar won all but one of the games it played.

AlphaStar’s successes astounded observers. Sure, it made mistakes, some obvious, some bizarre, but it won big anyway, taking 10 of the 11 games from the pros. AI systems sometimes win by exploiting a computer’s natural edges, such as faster reaction times and more actions per minute, but the DeepMind team tried to limit this, and AlphaStar actually had a slower-than-human reaction time and took fewer actions per minute than the pros. Instead, AlphaStar won by employing a variety of strategies, demonstrating an understanding of stealth and scouting, pressing an advantage when it had one, and retreating from ill-advised fights.

This isn’t just big news for video gamers worrying about technological unemployment. It demonstrates the extraordinary power of modern machine learning techniques and confirms that DeepMind is leading its field at applying those techniques to surpass humans in surprising new ways.

StarCraft II is a vastly more complex game than chess. While AlphaStar hasn’t yet taken on the best player in the world, there are a lot of similarities here to the chess matches between IBM’s Deep Blue and Garry Kasparov, which changed what we knew computers were capable of. It’s yet another reminder that advanced AI is on the way — and we need to start thinking about what it’ll take to deploy it safely.

What games are safe from artificial intelligence?

Three years ago, DeepMind — the London AI startup that was acquired by Google and is now an independent part of Google’s parent company Alphabet — made a global splash with AlphaGo, a neural network designed to play the two-person strategy game Go. AlphaGo surpassed all human experts, demonstrating a level of Go strategy that left professional Go players astounded and intrigued.

A year later, DeepMind followed it up with AlphaZero, an improved system for learning two-player games that could be trained to excel at Go, chess, and other games with similar properties.

Chess and Go both have some specific traits that make them straightforward to approach with the same machine-learning techniques. They are two-player, perfect-information games (meaning there’s no information hidden from either player). On each turn, there’s one decision to make: in chess, where to move a piece, and in Go, where to place a new one.

Modern competitive computer games like StarCraft are much, much more complicated. They typically require making many decisions at once, including decisions about where to focus your attention, and they usually involve imperfect information: not knowing exactly what your opponent is doing or what you are facing next.

“These kinds of real-time strategy games are really interesting as a benchmark task for modern AI research,” Jie Tang, an AI engineer at the AI research organization OpenAI, told me. “That’s for a couple reasons. One big one is long time horizons,” meaning the gap between when you make a decision and when you see its payoff. In chess or Go, the value of a move can usually be estimated right away by examining the resulting board and judging whether the position has become more winnable.

In a game like StarCraft (or DOTA, which Tang works on), “you’re making ten decisions per second every second for an hour, so that’s tens of thousands of moves you need to consider. So when you think about credit assignment — why did I win this game? was it because I built workers early? — that’s a really hard problem.”
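To put rough numbers on Tang’s point: ten decisions per second for an hour comes to 10 × 3,600 = 36,000 decisions in a single game. The short Python sketch below illustrates why credit assignment over that many moves is hard. It is a toy, not anything DeepMind or OpenAI has described using, and it assumes a textbook reinforcement-learning setup: the only reward is +1 for winning, delivered after the last move, and it is discounted by a hypothetical factor of 0.99 for every step back in time.

```python
# Toy illustration of the credit-assignment problem in a long game.
# Assumptions (hypothetical, for illustration only): ten decisions per
# second, a single +1 reward for winning after the final decision, and
# a discount factor of 0.99 per step.

DECISIONS_PER_SECOND = 10      # Tang's "ten decisions per second"
GAME_LENGTH_SECONDS = 60 * 60  # an hour-long game
GAMMA = 0.99                   # hypothetical per-step discount factor


def total_decisions(per_second: int, seconds: int) -> int:
    """Number of decisions made in a game at a fixed decision rate."""
    return per_second * seconds


def credit_for_step(step: int, horizon: int, gamma: float,
                    final_reward: float = 1.0) -> float:
    """Discounted credit a single end-of-game reward gives the decision at `step`.

    Decisions are indexed 0..horizon-1 and the reward arrives right after
    the last one, so decision `step` is (horizon - 1 - step) steps away.
    """
    return final_reward * gamma ** (horizon - 1 - step)


horizon = total_decisions(DECISIONS_PER_SECOND, GAME_LENGTH_SECONDS)
last = credit_for_step(horizon - 1, horizon, GAMMA)  # the final move
first = credit_for_step(0, horizon, GAMMA)           # the opening move

print(f"decisions per game: {horizon}")
print(f"credit to the last decision:  {last}")
print(f"credit to the first decision: {first:.3e}")
```

The exact figure depends entirely on the assumed discount factor. Dropping the discount doesn’t solve the problem, though; it just changes its shape, since every one of the 36,000 decisions would then get identical credit for the win, and the signal still couldn’t say whether building workers early was what mattered.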

Those are features that make these games a great testing ground for AI. Deep learning systems, like the ones DeepMind excels at building, need lots of data to develop their capabilities, and there’s a wealth of data about how people play: people have been playing StarCraft and StarCraft II online for 20 years. These games represent a bigger challenge for AI than chess or Go, but there is enough data available to make the challenge surmountable.

For that reason, AI labs are increasingly interested in testing their creations on online games. OpenAI, where Tang works, has been playing pros in DOTA. DeepMind partnered with Blizzard Entertainment back in 2017 to make tools available for training AIs on games like StarCraft.

Today, we saw the results.

StarCraft is a deep, complicated war strategy game. AlphaStar crushed it.

StarCraft has different game modes, but competitive StarCraft is a two-player game. Each player starts at their own base with some basic resources. They build up their base, send out scouts, and, when they’re ready, send out armies to attack the enemy base. The winner is whoever destroys all of the enemy buildings first (though it’s typical to concede the game once the outcome is obvious; all but one of the games we watched ended early).

Some StarCraft games end fast: you can build up an army early, send it out before your opponent is prepared, and crush them within five minutes. Others last for more than an hour. We watched matches where AlphaStar went for a fast, aggressive early strategy, and we watched matches that lasted significantly longer, with both players fielding large armies and advanced weapons. None of the games we watched lasted longer than about half an hour, which meant that we didn’t get the chance to see how AlphaStar handles StarCraft’s elaborate late game, but that’s only because no one could hold AlphaStar off for long enough to make it that deep into a game.

Today, DeepMind released recordings of ten games between AlphaStar and pros that had been played in secret over the last couple of months, and then streamed a live game between the latest version of AlphaStar and a well-ranked pro StarCraft player.

The first five games were matches against a pro player named TLO. For those matches, DeepMind trained a series of AIs, each with a slightly different focus, for a week of real time (during which the AIs played the equivalent of up to 200 years of StarCraft) and then selected the best-performing AIs to play against the human.

With 200 years of StarCraft under its belt, AlphaStar still made some obvious mistakes. It overbuilt certain useless units and ran into bottlenecks — in one memorable match, it paraded back and forth across a choke point, pointlessly setting itself up for a counterattack, while the commentators expressed profound confusion. It didn’t use all the tools at its disposal. Nonetheless, it won every game — its tactical strengths more than compensating for its weaknesses.

After the five matches against TLO, the DeepMind team sent AlphaStar back into training. After 14 days of real-time training, the winning agents from the tournament-style training environment had the equivalent of 200 more years of practice under their belts, and the difference was visible immediately. The AI no longer made obvious tactical blunders. Its decisions still didn’t always make sense to human observers, but it was harder to identify any of them as outright mistakes. This time it was playing a higher-rated pro, Grzegorz “MaNa” Komincz, and MaNa, unlike TLO, was playing with his favored race (StarCraft has three, and most pros specialize). Even when MaNa made no obvious blunders, he was simply outplayed, in large part because the AI could split and maneuver its units with more coordination than any human could manage. AlphaStar won every game again.

“It’s really interesting and really impressive,” Tang told me. “One high-level thing I was looking for was strategy versus mechanics.” That is, was the AI any good at coming up with big-picture approaches to the game, or was it just winning through masterful execution of bad strategies? AlphaStar turned out to excel on both fronts. “The high-level strategies it came up with were very similar to pro-level human play,” Tang observed. “It had perfect mechanics as well.”

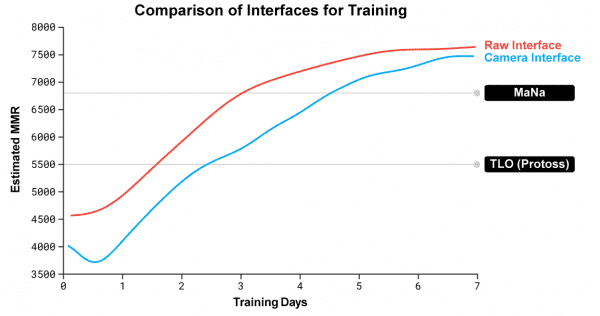

After this, DeepMind went back to the drawing board once more. In those 10 matches, the AI had one big advantage that a human player doesn’t have: it could see every part of the map where it had vision at the same time, while a human player has to move the camera around to look at different areas.

DeepMind trained a new version of AlphaStar that had to manipulate the camera, and used the same process — 200 years of training and then selecting the best agents from a tournament. This afternoon, in a live-streamed competitive match, this new AlphaStar AI lost to MaNa — it looked significantly hampered by having to manage the camera itself, and wasn’t able to pull off as many of the spectacular strategies the other versions of AlphaStar had managed in earlier games.

The loss was probably a disappointing end to the event for DeepMind, but that AI had trained for only seven days. It seems likely that when it gets the chance to train further, it’ll be back to winning. DeepMind has found that the AIs that have to manage the camera are only a little weaker, and catch up with practice.

The current models of AlphaStar certainly still have weaknesses. In fact, many of the shortcomings of the early AlphaStar AIs were reminiscent of DeepMind’s AlphaGo in its early matches. The early published versions of AlphaGo typically won but often made mistakes that humans could identify. The DeepMind team kept improving it, and AlphaZero today doesn’t make mistakes that humans can readily spot.

There is clearly still room for improvement in AlphaStar’s command of StarCraft. Much of its edge over humans comes from the fact that, being a computer, it’s much better at micromanaging. Its armies were gifted at outflanking and outmaneuvering human armies partly because it could direct five of them at once, something no human can do. These games produced few tactics likely to be widely adopted in pro play, because the AI mostly wasn’t winning by out-thinking humans within human limitations; it was mostly finding tactics that leaned into its own advantages. And while the AI was technically within human range for actions per minute and reaction time, it still appeared to have an edge there thanks to its greater precision. It would probably be fairer to handicap AlphaStar even further.

Humans do still have some substantial advantages over even the best AI. MaNa, for example, tailored his response to AlphaStar based on their five earlier matches, which might have given him the edge in the live event. AlphaStar can’t do that: we don’t know very much about training methods that would let an AI learn a lot in a single game and then apply those lessons in the next one.

Nonetheless, the announcers said again and again that in many respects AlphaStar was breathtakingly humanlike. It understood how to feint, how to muster an early attack, how to respond to an ambush, how to navigate the terrain. Tang has been working on AI for games since back when it was necessary to painstakingly give the computer instructions, and he told me “it’s just really really impressive how far we’ve come since then, and the kinds of decisions that modern AI and modern reinforcement learning is capable of.”

The one thing it didn’t know how to do, in the one game it lost, was to “good game” — to concede once the game was hopeless, like human players do.

Maybe the next time we see it, it will have figured that out. Maybe, by the next time we see it, it will never need to.


Source: vox.com