The very confusing landscape of advanced AI risk, briefly explained.

Kelsey Piper is a senior writer at Future Perfect, Vox’s effective altruism-inspired section on the world’s biggest challenges. She explores wide-ranging topics like climate change, artificial intelligence, vaccine development, and factory farms, and also writes the Future Perfect newsletter.

In 2016, researchers at AI Impacts, a project that aims to improve understanding of advanced AI development, released a survey of machine learning researchers. They were asked when they expected the development of AI systems that are comparable to humans along many dimensions, as well as whether to expect good or bad results from such an achievement.

The headline finding: The median respondent gave a 5 percent chance of human-level AI leading to outcomes that were “extremely bad, e.g. human extinction.” That means half of researchers gave an estimate of 5 percent or higher and half gave an estimate of 5 percent or lower.

If true, that would be unprecedented. In what other field do moderate, middle-of-the-road researchers claim that the development of a more powerful technology — one they are directly working on — has a 5 percent chance of ending human life on Earth forever?

In 2016 — before ChatGPT and AlphaFold — the result seemed much likelier to be a fluke than anything else. But in the eight years since then, as AI systems have gone from nearly useless to inconveniently good at writing college-level essays, and as companies have poured billions of dollars into efforts to build a true superintelligent AI system, what once seemed like a far-fetched possibility now seems to be on the horizon.

So when AI Impacts released their follow-up survey this week, the headline result — that “between 37.8% and 51.4% of respondents gave at least a 10% chance to advanced AI leading to outcomes as bad as human extinction” — didn’t strike me as a fluke or a surveying error. It’s probably an accurate reflection of where the field is at.

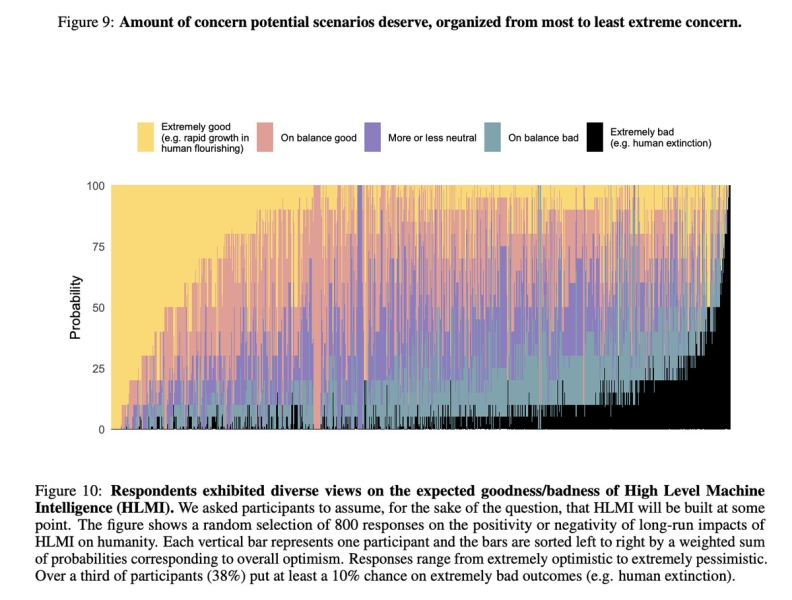

Their results challenge many of the prevailing narratives about AI extinction risk. The researchers surveyed don’t subdivide neatly into doomsaying pessimists and insistent optimists. “Many people,” the survey found, “who have high probabilities of bad outcomes also have high probabilities of good outcomes.” And human extinction does seem to be a possibility that the majority of researchers take seriously: 57.8 percent of respondents said they thought extremely bad outcomes such as human extinction were at least 5 percent likely.

This visually striking figure from the paper shows how respondents think about what to expect if high-level machine intelligence is developed: Most consider both extremely good outcomes and extremely bad outcomes probable.

As for what to do about it, experts seem to disagree even more than they do about whether there’s a problem in the first place.

Are these results for real?

The 2016 AI Impacts survey was immediately controversial. In 2016, barely anyone was talking about the risk of catastrophe from powerful AI. Could it really be that mainstream researchers rated it plausible? Had the researchers conducting the survey, who were themselves concerned about human extinction resulting from artificial intelligence, biased their results somehow?

The survey authors had systematically reached out to “all researchers who published at the 2015 NIPS and ICML conferences (two of the premier venues for peer-reviewed research in machine learning),” and managed to get responses from roughly a fifth of them. They asked a wide range of questions about progress in machine learning and got a wide range of answers: Really, aside from the eye-popping “human extinction” answers, the most notable result was how much ML experts disagreed with one another. (Which is hardly unusual in the sciences.)

But one could reasonably be skeptical. Maybe there were experts who simply hadn’t thought very hard about their “human extinction” answer. And maybe the people who were most optimistic about AI hadn’t bothered to answer the survey.

When AI Impacts reran the survey in 2022, again contacting thousands of researchers who published at top machine learning conferences, their results were about the same. The median probability of an “extremely bad, e.g., human extinction” outcome was 5 percent.

That median obscures some fierce disagreement. In fact, 48 percent of respondents gave at least a 10 percent chance of an extremely bad outcome, while 25 percent gave a 0 percent chance. Responding to criticism of the 2016 survey, the team asked for more detail: how likely did respondents think it was that AI would lead to “human extinction or similarly permanent and severe disempowerment of the human species?” Depending on how they asked the question, this got results between 5 percent and 10 percent.
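To see how a 5 percent median can coexist with that much disagreement, here is a minimal sketch using made-up numbers. The list of responses below is hypothetical, built only so that it reproduces the three summary figures quoted above (a 5 percent median, 48 percent of respondents at 10 percent or higher, 25 percent at zero). It is not the survey's data, and the helper name `share` is just for illustration.

```python
from statistics import median

# Hypothetical responses: each value is one respondent's estimated
# percent chance of an "extremely bad" outcome. These are NOT the
# survey's actual answers; they are constructed to match the three
# summary figures quoted in the article.
responses = [0] * 25 + [5] * 27 + [10] * 28 + [50] * 20


def share(values, predicate):
    """Return the fraction of values for which predicate(value) is true."""
    return sum(1 for v in values if predicate(v)) / len(values)


median_estimate = median(responses)
share_at_least_10 = share(responses, lambda v: v >= 10)
share_zero = share(responses, lambda v: v == 0)

print(f"median estimate: {median_estimate}%")
print(f"share giving at least 10%: {share_at_least_10:.0%}")
print(f"share giving 0%: {share_zero:.0%}")
```

In this toy sample, a fifth of respondents put the risk at 50 percent and a quarter put it at zero, yet the median still lands on 5 percent. The single headline number says little about how spread out the answers are.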

In 2023, in order to reduce and measure the impact of framing effects (different answers based on how the question is phrased), many of the key questions on the survey were asked of different respondents with different framings. But again, the answers to the question about human extinction were broadly consistent — in the 5-10 percent range — no matter how the question was asked.

The fact that the 2022 and 2023 surveys found results so similar to the 2016 result makes it hard to believe that the 2016 result was a fluke. And while in 2016 critics could correctly complain that most ML researchers had not seriously considered the issue of existential risk, by 2023 the question of whether powerful AI systems will kill us all had gone mainstream. It’s hard to imagine that many published machine learning researchers were answering a question they’d never considered before.

So … is AI going to kill us?

I think the most reasonable reading of this survey is that ML researchers, like the rest of us, are radically unsure about whether to expect the development of powerful AI systems to be an amazing thing for the world or a catastrophic one.

Nor do they agree on what to do about it. Responses varied enormously on questions about whether slowing down AI would make good outcomes for humanity more likely. While a large majority of respondents wanted more resources and attention to go into AI safety research, many of the same respondents didn’t think that working on AI alignment was unusually valuable compared to working on other open problems in machine learning.

In a situation with lots of uncertainty — like about the consequences of a technology like superintelligent AI, which doesn’t yet exist — there’s a natural tendency to want to look to experts for answers. That’s reasonable. But in a case like AI, it’s important to keep in mind that even the most well-regarded machine learning researchers disagree with one another and are radically uncertain about where all of us are headed.

A version of this story originally appeared in the Future Perfect newsletter.

Source: vox.com