The nuclear stakes of putting too much trust in AI.

Jeffrey Lewis is a professor at the Middlebury Institute of International Studies, where he focuses on nuclear arms control issues.

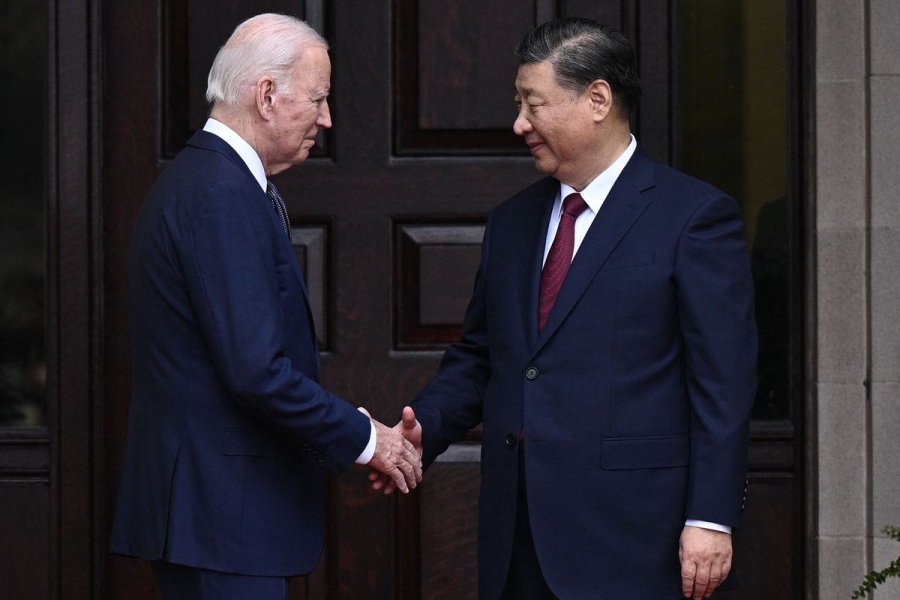

The big news from the summit between President Joe Biden and Chinese leader Xi Jinping is definitely the pandas. Twenty years from now, if anyone learns about this meeting at all, it will probably be from a plaque at the San Diego Zoo. That is, if there is anyone left alive to be visiting zoos. And, if some of us are here 20 years later, it may be because of something else the two leaders agreed to — talks about the growing risks of artificial intelligence.

Prior to the summit, the South China Morning Post reported that Biden and Xi would announce an agreement to ban the use of artificial intelligence in a number of areas, including the control of nuclear weapons. No such agreement was reached — nor was one expected — but readouts released by both the White House and the Chinese foreign ministry mentioned the possibility of US-China talks on AI. After the summit, in his remarks to the press, Biden explained that “we’re going to get our experts together to discuss risk and safety issues associated with artificial intelligence.”

US and Chinese officials were short on details about which experts would be involved or which risk and safety issues would be discussed. There is, of course, plenty for the two sides to talk about. Those discussions could range from the so-called “catastrophic” risk of AI systems that aren’t aligned with human values — think Skynet from the Terminator movies — to the increasingly commonplace use of lethal autonomous weapons systems, which activists sometimes call “killer robots.” And then there is the scenario somewhere in between the two: the potential for the use of AI in deciding to use nuclear weapons, ordering a nuclear strike, and executing one.

A ban, though, is unlikely to come up, for at least two key reasons. The first issue is definitional. There is no neat and tidy definition that divides the kind of artificial intelligence that is already integrated into everyday life around us from the kind we worry about in the future. Artificial intelligence already wins all the time at chess, Go, and other games. It drives cars. It sorts through massive amounts of data, which brings me to the second reason no one wants to ban AI in military systems: It’s much too useful. The things AI is already so good at doing in civilian settings are also useful in war, and it’s already been adopted for those purposes. As artificial intelligence becomes more and more intelligent, the US, China, and others are racing to integrate these advances into their respective military systems, not looking for ways to ban them. There is, in many ways, a burgeoning arms race in the field of artificial intelligence.

Of all the potential risks, it is the marriage of AI with nuclear weapons — our first truly paradigm-altering technology — that should most capture the attention of world leaders. AI systems are so smart, so fast, and likely to become so central to everything we do that it seems worthwhile to take a moment and think about the problem. Or, at least, to get your experts in the room with their experts to talk about it.

So far, the US has approached the issue by talking about the “responsible” development of AI. The State Department has been promoting a “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy.” This is neither a ban nor a legally binding treaty, but rather a set of principles. And while the declaration outlines several principles of responsible uses of AI, the gist is that, first and foremost, there be “a responsible human chain of command and control” for making life-and-death decisions — often called a “human in the loop.” This is designed to address the most obvious risk associated with AI, namely that autonomous weapons systems might kill people indiscriminately. This goes for everything from drones to nuclear-armed missiles, bombers, and submarines.

Of course, it’s nuclear-armed missiles, bombers, and submarines that are the largest potential threat. The first draft of the declaration specifically identified the need for “human control and involvement for all actions critical to informing and executing sovereign decisions concerning nuclear weapons employment.” That language was actually deleted from the second draft — but the idea of maintaining human control remains a key element of how US officials think about the problem. In June, Biden’s national security adviser Jake Sullivan called on other nuclear weapons states to commit to “maintaining a ‘human-in-the-loop’ for command, control, and employment of nuclear weapons.” This is almost certainly one of the things that American and Chinese experts will discuss.

It’s worth asking, though, whether a human-in-the-loop requirement really solves the problem, at least when it comes to AI and nuclear weapons. Obviously, no one wants a fully automated doomsday machine. Not even the Soviet Union, which invested countless rubles in automating much of its nuclear command-and-control infrastructure during the Cold War, went all the way. Moscow’s so-called “Dead Hand” system still relies on human beings in an underground bunker. Having a human being “in the loop” is important. But it matters only if that human being has meaningful control over the process. The growing use of AI raises questions about how meaningful that control might be — and whether we need to adapt nuclear policy for a world where AI influences human decision-making.

Part of the reason we focus on human beings is that we have a kind of naive belief that, when it comes to the end of the world, a human being will always hesitate. A human being, we believe, will always see through a false alarm. We’ve romanticized the human conscience to the point that it is the plot of plenty of books and movies about the bomb, like Crimson Tide. And it’s the real-life story of Stanislav Petrov, the Soviet missile warning officer who, in 1983, saw what looked like a nuclear attack on his computer screen, decided that it must be a false alarm, and didn’t report it, arguably saving the world from a nuclear catastrophe.

The problem is that world leaders might push the button. The entire idea of nuclear deterrence rests on demonstrating, credibly, that when the chips are down, the president would go through with it. Petrov isn’t a hero without the very real possibility that, had he reported the alarm up the chain of command, Soviet leaders might have believed an attack was under way and retaliated.

Thus, the real danger isn’t that leaders will turn over the decision to use nuclear weapons to AI, but that they will come to rely on AI for what might be called “decision support” — using AI to guide their decision-making about a crisis in the same way we rely on navigation applications to provide directions while we drive. This is what the Soviet Union was doing in 1983 — relying on a massive computer that used thousands of variables to warn leaders if a nuclear attack was under way. The problem, though, was the oldest problem in computer science — garbage in, garbage out. The computer was designed to tell Soviet leaders what they expected to hear, to confirm their most paranoid fantasies.

Russian leaders still rely on computers to support decision-making. In 2016, the Russian defense minister showed a reporter a Russian supercomputer that analyzes data from around the world, like troop movements, to predict potential surprise attacks. He proudly mentioned how little of the computer was currently being used. This space, other Russian officials have made clear, will be used when AI is added.

Having a human in the loop is much less reassuring if that human is relying heavily on AI to understand what’s happening. Because AI is trained on our existing preferences, it tends to confirm a user’s biases. This is precisely why social media, using algorithms trained on user preferences, tends to be such an effective conduit for misinformation. AI is engaging because it mimics our preferences in an utterly flattering way. And it does so without a shred of conscience.

Human control may not be the safeguard we would hope for in a situation where AI systems are generating highly persuasive misinformation. Even if a world leader does not rely on explicitly AI-generated assessments, in many cases AI will have been used at lower levels to inform assessments that are presented as a human judgment. There is even the possibility that human decision-makers may become overly dependent on AI-generated advice. A surprising amount of research suggests that those of us who rely on navigation apps gradually lose the basic skills associated with navigation and can become lost if the apps fail; the same concern could be applied to AI, with far more serious implications.

The US maintains a large nuclear force, with several hundred land- and sea-based missiles ready to fire on only minutes’ notice. The quick reaction time gives a president the ability to “launch on warning” — to launch when satellites detect enemy launches, but before the missiles arrive. China is now in the process of mimicking this posture, with hundreds of new missile silos and new early-warning satellites in orbit. In periods of tension, nuclear warning systems have suffered false alarms. The real danger is that AI might persuade a leader that a false alarm is genuine.

While having a human in the loop is part of the solution, giving that human meaningful control requires designing nuclear postures that minimize reliance on AI-generated information — such as abandoning launch on warning in favor of definitive confirmation before retaliation.

World leaders are probably going to rely increasingly on AI, whether we like it or not. We’re no more able to ban AI than we could ban any other information technology, whether it’s writing, the telegraph, or the internet. Instead, what US and Chinese experts ought to be talking about is what sort of nuclear weapons posture makes sense in a world where AI is ubiquitous.

Source: vox.com