This story is part of a group of stories called Open Sourced.

Uncovering and explaining how our digital world is changing — and changing us.

Facebook Messenger is rolling out a new tool to combat scammers and imposters on its platform. The company will use artificial intelligence to help identify these potential bad actors and provide safety notices to users about messages from shady accounts. This represents the latest example of Facebook employing automation to address its myriad content moderation problems. The company also says the tool brings Messenger closer to being end-to-end encrypted by default.

A key detail of how this new strategy works is that Facebook is trying to limit how much its AI actually reads the content of user messages in order to identify scams. The technology is instead watching for potential abuse based on what accounts are doing.

“We may use signals [such as] user reports or reported content to inform the machine learning models,” a Facebook spokesperson told Recode, “but we don’t do so proactively, and we primarily use behavioral signals to determine when to surface a safety notice.”

For instance, it might create a notice if an account is trying to use a name that looks like that of one of your friends, or if an account that belongs to an adult is sending a bunch of messages or friend requests to minors. Because the moderation tool does not access message content, it’s designed to work with full encryption, which Facebook has long said will roll out on Messenger. (Facebook-owned WhatsApp is already end-to-end encrypted.)
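To make the idea of behavioral signals concrete, here is a minimal Python sketch of the two examples above. It is purely illustrative and is not Facebook's actual system: the `Account` fields, the `safety_notices` function, the notice labels, and both thresholds are hypothetical assumptions. The point it shows is that neither check needs to read the text of any message.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Account:
    # All fields are hypothetical; Facebook has not published its signals.
    account_id: int
    name: str
    is_adult: bool
    recent_contacts_with_minors: int = 0


def safety_notices(sender, friends, name_threshold=0.85, minor_contact_limit=20):
    """Return notice labels based only on account behavior, never message text.

    `friends` maps the recipient's friend account IDs to display names.
    Both thresholds are made-up values chosen for illustration.
    """
    notices = []

    # Signal 1: a non-friend account whose name closely resembles a friend's.
    if sender.account_id not in friends:
        sender_name = sender.name.casefold()
        for friend_name in friends.values():
            similarity = SequenceMatcher(
                None, sender_name, friend_name.casefold()
            ).ratio()
            if similarity >= name_threshold:
                notices.append("possible_impersonation")
                break

    # Signal 2: an adult account contacting an unusually large number of minors.
    if sender.is_adult and sender.recent_contacts_with_minors > minor_contact_limit:
        notices.append("adult_mass_contacting_minors")

    return notices


friends = {1: "Jordan Smith"}
lookalike = Account(account_id=99, name="Jordan Smlth", is_adult=True)
print(safety_notices(lookalike, friends))
```

A real system would presumably weigh many more signals with trained models rather than fixed thresholds, but the sketch shows why this style of detection can, in principle, coexist with end-to-end encryption.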

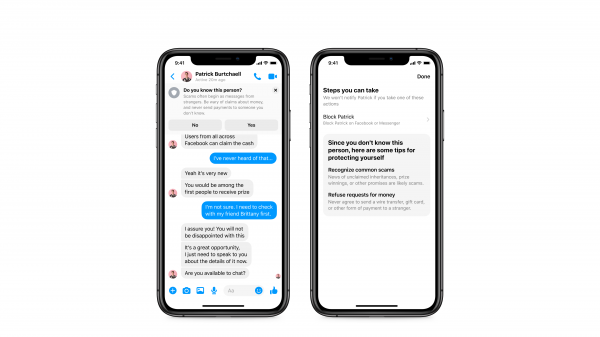

Notably, Facebook does not automatically block potentially fake or scammy accounts if they’re flagged by this new automated moderation tool. Instead, Messenger will now display a safety notice that warns the user and gives them the chance to block the person or read about how to protect themselves from scams. This strategy is not unlike Facebook’s recent move to nudge users who have liked coronavirus hoaxes.

This new tool is attempting to solve a well-documented problem on Messenger. Con artists continue to find creative and convincing ways to impersonate users’ friends and family in order to trick people into giving up their money or financial information. One of Facebook’s new safety messages even specifically warns people to “Refuse requests for money.” And more recently, those ploys have taken advantage of the Covid-19 pandemic, with scammers promoting fake treatments and charity efforts. The problem got bad enough that the Better Business Bureau issued a public warning about it.

Fakes like these are a systemic problem on Facebook’s platform. The company estimates in its own community standards enforcement report that it disabled 1.7 billion fake accounts in the first three months of 2020 alone. Facebook also estimates that about 5 percent of its monthly active users in the last quarter were fake accounts, though the company claims it catches almost all of these accounts with automation before users need to report them.

Importantly, however, this latest attempt to address its massive scam problem will not apply to misinformation, which has long festered on Facebook’s platforms. Fake news and conspiracy theories are finding new fertile ground amid the Covid-19 pandemic. Since the outbreak of the novel coronavirus, Facebook has made several policy changes in order to curb the Covid-19 “infodemic,” as Recode has noted. But again, these new safety notices do not apply to fake news and misinformation, despite their prevalence on private messaging apps like Messenger and WhatsApp.

A Facebook spokesperson told Recode in an email that it uses a “forwarded” label in Messenger to indicate when a specific message did not originate from the sender and that the company is “exploring more strict forward limits.” For at least a couple of years, Facebook has gone even further by limiting forwarding on its messaging platforms. The company employed this method to fight fake news after violence in India and Myanmar a few years ago, and more recently claimed that limiting forwarding cut down on the spread of viral messages in the midst of the coronavirus pandemic by 70 percent. It’s unclear if these messages actually contained fake news, however.

The new safety notices also represent yet another attempt by Facebook to use automation to curb its toughest content challenges, which are significant. Facebook has already employed AI in its efforts to solve problems ranging from terrorist propaganda and recruitment to illegal opioid sales, though none of those abuses has completely gone away. And most recently, when the pandemic forced the company’s human reviewers from their offices, Facebook again turned to its AI-powered content moderation technology, though it warned that the technology would be less than ideal.

Ultimately, time will tell whether the new safety alerts in Messenger will cut down on scams, and there’s no reason to scoff at the technology’s power to quickly flag large amounts of content and give users a heads-up that something might be fishy. Scammers tend to find workarounds, so the technology will continue to evolve. And again, at the end of the day, it’s up to the user to notice the alert and do something about it.

The feature has been rolling out on Android devices since March, and Facebook says it has been flagging potentially concerning messages for more than 1 million people a week. Starting next week, the feature will begin expanding to iOS users.

Open Sourced is made possible by Omidyar Network. All Open Sourced content is editorially independent and produced by our journalists.

Source: vox.com