In 2018, psychology PhD student William McAuliffe co-published a paper in the prestigious journal Nature Human Behaviour. The study’s conclusion, that people become less generous over time when they make decisions in an environment where they don’t know or interact with other people, was fairly nuanced.

But the university’s press department, perhaps in an attempt to make the study more attractive to news outlets, amped up the finding. The headline of the press release heralding the publication of the study read “Is big-city living eroding our nice instinct?”

From there, the study took on a new life as stories in the press appeared with headlines like “City life makes humans less kind to strangers.”

This interpretation wasn’t correct: The study was conducted in a lab, not a city. And it measured investing money, not overall kindness.

But what was most frustrating to McAuliffe was that the error originated with his own university’s press department. The university, he says, got the idea from a comment from one of the study authors “about how our results are consistent with an old idea that cities are less hospitable … than rural areas.”

It didn’t matter that the text of the press release got the study details right. “I even had radio stations call me, disappointed to learn that our study had not been a field study comparing behavior in cities to rural areas,” he says.

This story will be familiar to many scientists. This is a big, stubborn problem in how science gets communicated. It’s infuriating to researchers to see their work distorted and overhyped. It makes researchers distrust the media.

Frequently, stories about scientific research declare that an exciting new treatment works but fail to mention that the intervention was performed on mice. Or they breathlessly report what the latest study finds on the health benefits (or risks) of coffee without assessing the weight of the available evidence.

The truth is, a lot of these misconceptions start as the one around McAuliffe’s study did: with the university press release. But here, there is hope: Even though a lot of hyped-up science may start from university press releases, new research finds that press releases may be a powerful tool to inoculate reporters against hyped-up claims.

Many journalists just follow the lead of press releases

To be honest, the research on how scientific press releases translate to press coverage doesn’t make my profession look all that good. It suggests that we largely just repeat whatever we’re told from the press releases, for good or for bad. It’s concerning. If we can’t evaluate the claims of press releases, how can we evaluate the merits of studies (which aren’t immune to shoddy methods and overhyped findings themselves)?

A 2014 correlational study found that when press releases exaggerate findings, the news articles that follow are more likely to contain exaggerations as well. My colleague Julia Belluz wrote about it at the time. Basically, it found that a lot of science journalism just parroted the (bad) claims written about in press releases:

The difference between what scientists report in the studies and what journalists report in their articles can look like a game of broken telephone. A study investigating the neural underpinnings of why shopping is joyful gets garbled into a piece about how your brain thinks shopping is as good as sex. A study exploring how dogs intuit human emotions becomes “Our dogs can read our minds.”

But here’s a key thing: Scientists and universities can ensure the first line in the telephone chain is loud and clear.

The 2014 study “was a real wake-up call,” Chris Chambers, a professor of cognitive neuroscience at Cardiff University and co-author on that paper, says. “We thought, okay, hang on a second, if exaggeration is originating in the press release, and if the press release is under the control of the scientist who signs it off, then it’s rather hypocritical for the scientist to then be turning around and saying, ‘Hey, reporter, you exaggerated my research,’ or, ‘You got it wrong.’”

Recently, Chambers and colleagues revisited the topic, with a new study published in BMC Medicine. It too finds evidence that when university press releases are made clearer, more accurate, and free of hype, science news reported by journalists gets better as well.

What’s more, the study found that more accurate press releases don’t receive less coverage, and the coverage they do receive tends to be more accurate.

“The main message of our paper is that you can have more accurate press releases without reducing news uptake, and that’s good news,” says Chambers.

In the new paper, he and his team actually did an experiment: Working with nine university press offices in the UK, the research team intercepted 312 press releases before they were sent out to the press. The press releases were randomized to receive an intervention or not, with the intervention consisting of Chambers’s team jumping in to make sure the content of the press releases accurately reflected the scientific study. For instance, they made sure the claims in the press release emphasized that the study was correlational and could not imply causation.

On Twitter, I asked scientists to give me some examples of research results they felt were misinterpreted by the press. Here’s a particularly alarmist headline one researcher sent me, from the Daily Mail: “Are smartphones making us STUPID? ‘Googling’ information is making us mentally lazy, study claims.”

This seems concerning, right? Who wants to be made less intelligent by their smartphone?

But the story was based on a correlational study. No causal claims can be made from it. The study authors even wrote that “the results reported herein should be considered preliminary” in explaining the limitations of the work.

Yes, the Daily Mail ought to have read this section of the study. Still, where did it get the idea from in the first place?

Perhaps it was the press release announcing the publication of the study. That release had the headline “Reliance on smartphones linked to lazy thinking.” The word “linked,” Chambers says, suggests causality, and ought to be tweaked to “Reliance on smartphones is associated with lazy thinking,” or something similar.

Chambers and his team made such tweaks in their study, but they ran into a problem. There’s something called the Hawthorne effect, which means that when people know they’re being studied, they tend to be on their best behavior.

Many of the press releases that Chambers and his team got already looked pretty good. They didn’t end up tweaking as many press releases as they would have liked for a pure experiment. Long story short: Their results here are correlational, not causal, as they ended up lumping together the experimentally manipulated press releases and the ones that were already good in the same analysis.

Still, the study comes to a conclusion that ought to be obvious: When universities put forth good, unhyped information, unhyped news follows. And perhaps more importantly, the researchers didn’t find evidence that these more careful press releases get less news coverage. That should send a message: Universities don’t need to hype findings to get coverage.

Misinformation is a huge problem online, scientific or not. Everyone needs to guard against it.

What does it matter if a few science news headlines get hyped up? Will uncautious stories about the cancer risk of drinking wine really cause trouble?

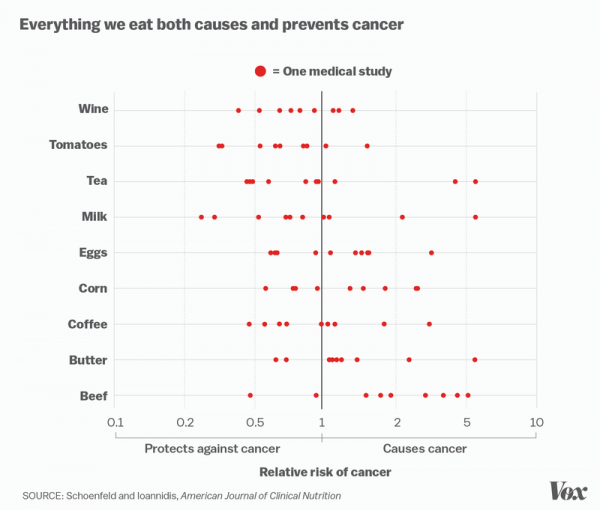

“We argue that’s bad because it risks undermining trust in science generally,” Chambers says. “What you end up with is with 50 articles in the Daily Mail saying that caffeine causes cancer and 50 saying that it cures cancer. People read this stuff and they go, ‘We don’t know anything!’ So it just adds a lot of uncertainty and a lot of noise to public understanding of science.”

And if there’s a lesson in the past few years of “fake news,” it’s this: You don’t need to infect many people with a bad idea to cause trouble. Just look at the uptick in measles cases in the US. Most people do vaccinate their kids. But a few, who have absorbed shoddy science, have caused a lot of trouble. Same goes for the spreading of conspiracy theories. You don’t need a lot of people believing in something wrong to cause harm. Just look at what happened with “Pizzagate.” False stories often move through the internet faster than the truth and are incredibly difficult to correct after they are unleashed.

“I think what we need is to establish that the responsibility [to be accurate] lies with everyone,” Chambers says. “The responsibility lies with the scientists to ensure that the press release is as accurate as possible. The responsibility lies with the press officer to ensure that they listen to the scientist. And then the science journalists need to be responsible for making sure they read the original article to the best of their ability and deflate exaggeration as much as possible that might persist despite all of our best efforts.”

As a science journalist, I’ve really appreciated it when academics have gone above and beyond to try to communicate tricky findings to the public. One of the best examples of this: Last year, when a huge, easy-to-misunderstand paper linked genetics to educational success, the researchers wrote an enormous FAQ in plain language, getting ahead of misconceptions. The FAQ was possibly longer than the scientific paper itself. In my interviews with researchers, I try to ask a version of the question: “What are the wrong conclusions to draw from this study?” Press releases, and other science communications from universities, would do well to include similar disclaimers in plain language.

Scientists also don’t have to go through their press departments to get their work to the public. Some of my favorite stories have come from researchers emailing me directly about their work, or work from their peers.

Chambers says scientists often don’t have incentives to make sure their work is well communicated in the press. But perhaps that should change.

“I think researchers have a duty to figure out how to translate their findings to the general public, who in many cases paid for the work with their tax dollars and thus deserve some sort of benefit,” McAuliffe says.

As for science journalists, I’ll speak for myself: I’ll try not to mess it up too bad.

Source: vox.com