Shadowbanning and the Israel-Hamas war, explained.

A.W. Ohlheiser is a senior technology reporter at Vox, writing about the impact of technology on humans and society. They have also covered online culture and misinformation at the Washington Post, Slate, and the Columbia Journalism Review, among other places. They have an MA in religious studies and journalism from NYU.

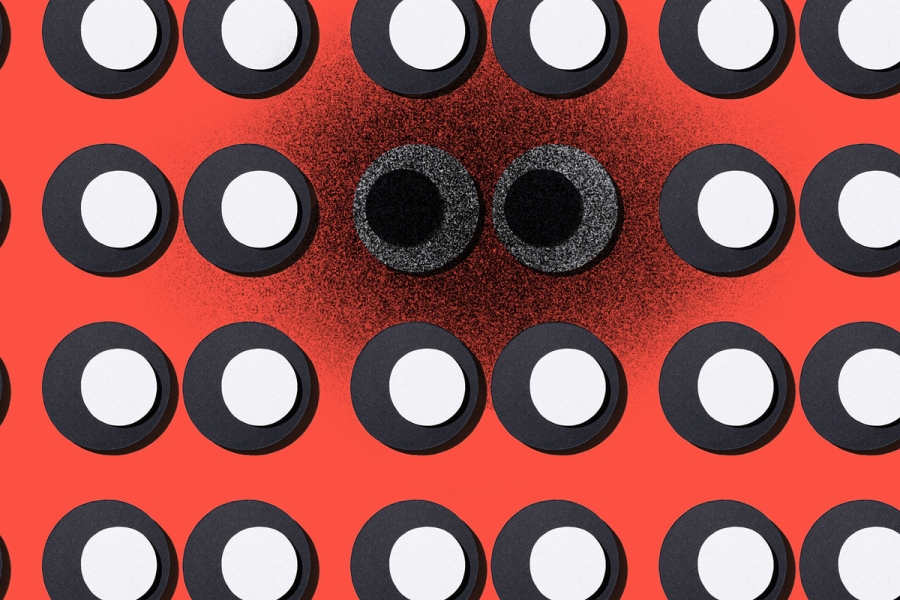

“Algospeak” is an evasion tactic for automated moderation on social media, where users create new words to use in place of keywords that might get picked up by AI-powered filters. People might refer to dead as “unalive,” or sex as “seggs,” or porn as “corn” (or simply the corn emoji).
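To make the mechanics concrete, here is a minimal sketch of why a substituted word can slip past a simple keyword filter. It assumes a plain whole-word blocklist; the `BLOCKED_KEYWORDS` set and the `is_flagged` function are hypothetical names invented for illustration, and real platform filters are not public and are likely far more sophisticated.

```python
import re

# Hypothetical blocklist for illustration only; no platform publishes its real list.
BLOCKED_KEYWORDS = {"dead", "sex", "porn"}


def is_flagged(post: str) -> bool:
    """Return True if any whole word in the post is on the blocklist."""
    # Lowercase the post and split it into runs of letters.
    words = re.findall(r"[a-z]+", post.lower())
    return any(word in BLOCKED_KEYWORDS for word in words)


print(is_flagged("sex education resources"))    # True: exact keyword match
print(is_flagged("seggs education resources"))  # False: the algospeak spelling is not on the list
```

A filter like this only catches the spellings it already knows, which may be part of why new substitutions keep appearing.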

There’s an algospeak term for Palestinians as well: “P*les+in1ans.” Its very existence speaks to a concern among many people posting and sharing pro-Palestine content during the war between Hamas and Israel that their posts are being unfairly suppressed. Some users believe their accounts, along with certain hashtags, have been “shadowbanned” as a result.

Algospeak is just one of the user-developed methods of varying effectiveness that are supposed to dodge suppression on platforms like TikTok, Instagram, and Facebook. People might use unrelated hashtags, screenshot instead of repost, or avoid employing Arabic hashtags to attempt to evade apparent but unclear limitations on content about Palestine. It’s not clear whether these methods really work, but their spread among activists and around the internet speaks to the real fear of having this content hidden from the rest of the world.

“Shadowbanning” gets thrown around a lot as an idea, is difficult to prove, and is not always easy to define. Below is a guide to its history, how it manifests, and what you as a social media user can do about it.

What is shadowbanning?

Shadowbanning is an often covert form of platform moderation that limits who sees a piece of content, rather than banning it altogether. According to a Vice dive into the history of the term, it likely dates back to the internet bulletin board systems of the 1980s.

In its earliest iterations, shadowbanning worked kind of like a digital quarantine: Shadowbanned users could still log in and post to the community, but no one else could see their posts. They were present but contained. If someone was shadowbanned by one of the site’s administrators for posting awful things to a message board, they’d essentially be demoted to posting into nothingness, without knowing that was the case.
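The quarantine behavior described above can be sketched in a few lines. This is an illustrative model only, not how any particular bulletin board or platform implemented it; the `Board` class and its method names are assumptions made up for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Board:
    """Hypothetical message board with an old-style shadowban list."""

    shadowbanned: set[str] = field(default_factory=set)
    posts: list[tuple[str, str]] = field(default_factory=list)  # (author, text)

    def post(self, author: str, text: str) -> None:
        # Posting always succeeds, so a shadowbanned user sees no error.
        self.posts.append((author, text))

    def visible_posts(self, viewer: str) -> list[tuple[str, str]]:
        # Posts by shadowbanned authors are shown only to those authors.
        return [
            (author, text)
            for author, text in self.posts
            if author not in self.shadowbanned or author == viewer
        ]


board = Board(shadowbanned={"troll"})
board.post("alice", "hello")
board.post("troll", "spam")
print(board.visible_posts("alice"))  # [('alice', 'hello')]
print(board.visible_posts("troll"))  # [('alice', 'hello'), ('troll', 'spam')]
```

The shadowbanned user's own view looks normal, which is why the ban is hard to notice from the inside.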

Social media, as it evolved, upended how communities formed and gathered online, and the definition of shadowbanning expanded. People get seen online not just by creating an account and posting to a community’s virtual space, but by understanding how to get engagement through a site’s algorithms and discovery tools, by getting reshares from influential users, by purchasing ads, and by building followings of their own. Moderating became more complicated as users became savvier about getting seen and working around automated filters.

At this point, shadowbanning has come to mean any “really opaque method of hiding users from search, from the algorithm, and from other areas where their profiles might show up,” said Jillian York, the director for international freedom of expression for the Electronic Frontier Foundation (EFF). A user might not know they’ve been shadowbanned. Instead, they might notice the effects: a sudden drop in likes or reposts, for instance. Their followers might also have issues seeing or sharing content a shadowbanned account posts.

If you’re from the United States, you might know shadowbanning as a term thrown around by conservative activists and politicians who believe that social media sites — in particular Facebook and Twitter (now X) — have deliberately censored right-wing views. This is part of a years-long campaign that has prompted lawsuits and congressional hearings.

While the evidence is slim that these platforms were engaging in systemic censorship of conservatives, the idea seems to catch on any time a platform takes action against a prominent right-wing account with a large following. The Supreme Court recently agreed to hear a pair of cases challenging laws in Texas and Florida that restrict how social media companies can moderate their sites.

Why are people concerned about shadowbanning in relation to the Israel-Hamas war?

War produces a swell of violent imagery, propaganda, and misinformation online, circulating at a rapid pace and triggering intense emotional responses from those who view it. That is inevitable. The concern from activists and digital rights observers is that content about Palestinians is not being treated fairly by the platforms’ moderation systems, leading to, among other things, shadowbanning.

Outright account bans are pretty visible to both the account holder and anyone else on the platform. Some moderation tools designed to combat misinformation involve publicly flagging content with information boxes or warnings. Shadowbanning, by comparison, is not publicly labeled, and platforms might not tell a user that their account’s reach is limited, or why. Some users, though, have noticed signs that they might be shadowbanned after posting about Palestine. According to Mashable, these include Instagram users who saw their engagement crater after posting with their location set to Gaza in solidarity, sharing links to fundraisers to help people in Palestine, or posting content that is supportive of Palestinians.

Some digital rights organizations, including the EFF and 7amleh-The Arab Center for Social Media Advancement, are actively tracking potential digital rights violations of Palestinians during the conflict, particularly on Instagram, where some Palestinian activists have noticed troubling changes to how their content circulates in recent weeks.

“These include banning the use of Arabic names for recent escalations [i.e., the Israel-Hamas war] while allowing the Hebrew name, restricting comments from profiles that are not friends, and…significantly reducing the visibility of posts, Reels, and stories,” Nadim Nashif, the co-founder and director of 7amleh, wrote in an email to Vox.

In a statement, Meta said that the post visibility issues impacting some Palestinian users were due to a global “bug” and that some Instagram hashtags were no longer searchable because a portion of the content using them violated Meta rules. Meta’s statement does not name specific hashtags that have been limited under this policy.

Mona Shtaya, a Palestinian digital rights activist, took to Instagram to characterize the hashtag shadowbans as a “collective punishment against people who share political thoughts or document human rights violations” that will negatively impact efforts to fact-check and share accurate information about the situation in Gaza.

What’s the difference between shadowbanning and moderation bias?

Shadowbanning is just one aspect of a broader problem that digital rights experts are tracking when it comes to potential bias in the enforcement of a platform’s rules. And this is not a new issue for pro-Palestinian content.

Moderation bias “comes in a lot of different flavors, and it’s not always intentional,” York said. Platforms might underresource or incorrectly resource their language competency for a specific language, something that York said has long been an issue with how US-based platforms such as Meta moderate content in Arabic. “There might be significant numbers of Arabic-language content moderators, but they struggle because Arabic is across a number of different dialects,” she noted.

Biases also emerge in how certain terms are classified by moderation algorithms. We know that this specific sort of bias can affect Palestinian content because it happened before. In 2021, during another escalation in conflict between Hamas and Israel, digital rights groups documented hundreds of content removals that seemed to unfairly target pro-Palestine sentiments. Meta eventually acknowledged that its systems were blocking references to the al-Aqsa Mosque, a holy site for Muslims that was incorrectly flagged in Meta’s systems as connected to terrorist groups.

Meta commissioned an independent report into its moderation decisions during the 2021 conflict, which documented Meta’s weaknesses in moderating Arabic posts in context. It also found that Meta’s decisions “appear to have had an adverse human rights impact” on the rights of Palestinian users’ “freedom of expression, freedom of assembly, political participation, and non-discrimination.”

In response to the report, Meta promised to review its relevant policies and improve its moderation of Arabic, including by recruiting more moderators with expertise in specific dialects. Meta’s current moderation of Israel-Hamas war content is being led by a centralized group with expertise in Hebrew and Arabic, the company said. Some content removals, it added, are going through without account “strikes” to avoid automatically banning accounts that have had content taken down in error.

What other content gets shadowbanned?

Claims of shadowbanning are associated with divisive issues. But probably the best-documented cases have to do with how major platforms handle content about nudity and sex. Sex workers have long documented their own shadowbans on mainstream social media platforms, particularly after a pair of bills aimed at stopping sex trafficking passed in 2018, removing protections for online platforms that hosted a wide range of content about sex.

In general, York said, shadowbans become useful moderation tools for platforms when the act of directly restricting certain sorts of content might become a problem for them.

“They don’t want to be seen as cutting people off entirely,” she said. “But if they’re getting pressure from different sides, whether it’s governments or shareholders or advertisers, it is probably in their interest to try to curtail certain types of speech while also allowing the people to stay on the platform so it becomes less of a controversy.”

TikTok content can also get shadowbanned, according to its community guidelines, which note that the platform “may reduce discoverability, including by redirecting search results, or making videos ineligible for recommendation in the ‘For You’ feed” for violations. Other platforms, like Instagram, YouTube, and X, have used tools to downrank or limit the reach of “borderline” or inappropriate content, as defined by their moderation systems.

It’s very difficult, if not impossible, to prove shadowbanning unless a company decides to confirm that it happened. Still, there are some documented cases of the biases inherent within these moderation systems that, while not quite fitting the definition of shadowbanning, might be worth considering when evaluating claims. TikTok had to correct an error in 2021 that automatically banned creators from using phrases like “black people” or “black success” in their marketing bios for the platform’s database of creators who are available to create sponsored content for brands. In 2017, LGBTQ creators discovered that YouTube had labeled otherwise innocuous videos that happened to feature LGBTQ people in them as “restricted content,” limiting their viewability.

How can you tell if your account has been shadowbanned?

This can be difficult! “I do feel like people are often gaslighted by the companies about this,” said York. Many platforms, she continued, “won’t even admit that shadowbanning exists,” even if they use automated moderation tools like keyword filters or account limitations that are capable of creating shadowbans. And some of the telltale signs of shadowbanning — lost followers, a drop in engagement — could be explained by an organic loss of interest in a user’s content or a legitimate software bug.

Some platform-specific sites promise to analyze your account and let you know if you’ve been shadowbanned, but those tools are not foolproof. (You should also be careful about giving your account information to a third-party site.) There is one solution, York said: Companies could be more transparent about the content they take down or limit, and explain why.

Finding good information about a conflict is already difficult. This is especially true for those trying to learn more about the Israel-Hamas war, and in particular, about Gaza. Few journalists have been able to do on-the-ground reporting from Gaza, making it extremely difficult to verify and contextualize the situation there. According to the Committee to Protect Journalists, as of this writing, 29 journalists have died since the war began. Adding the specter of shadowbans to this crisis of reliable information threatens yet another avenue for providing and amplifying firsthand accounts to a wider public.

Source: vox.com