Clean energy technologies threaten to overwhelm the grid. Here’s how it can adapt.

The centralized, top-down power grid is outdated. Time for a bottom-up redesign.

By David Roberts (@drvox)

Updated Dec 1, 2018, 10:16am EST

Graphics: Javier Zarracina

The US power grid is, by some estimates, the largest machine in the world, a continent-spanning wonder of the modern age. And despite its occasional well-publicized failures, it is remarkably reliable, delivering energy to almost every American, almost every second of every day.

This is an especially remarkable accomplishment given that, until very recently, almost none of that power could be stored. It all has to be generated, sent over miles of wires, and delivered to end users at the exact second they need it, in a perfectly synchronized dance.

Given the millions of Americans, their billions of electrical devices, and the thousands of miles of electrical wires involved, well, it’s downright amazing.

Still, as you may have heard, the grid is stressed out. Blackouts due to extreme weather (hurricanes, floods, wildfires) are on the rise, in part due to climate change, which is only going to get worse. The need for local resilience in the face of climate chaos is growing all the time.

What’s more, the energy world is changing rapidly. A system designed around big, centralized power plants and one-way power flows is grinding against the rise of smarter, cleaner technologies that offer new ways to generate and manage energy at the local level (think solar panels and batteries).

Unless old systems are reconceived and redesigned, they could end up slowing down, and increasing the cost of, the transition to clean electricity (and hampering the fight against climate change).

Energy professionals are aware that strains are starting to show. Energy sector reform is all the buzz these days, with active discussions and experimentation around rate design, market reforms, subsidies, regulations, and legislative targets.

But according to Lorenzo Kristov, the rise of new energy technologies should occasion a step back and a fresh, holistic perspective — not just a reactive scramble on policy. Now in private practice as an energy consultant, Kristov saw the challenges facing the grid up close as a longtime principal at the California Independent System Operator (CAISO), which runs California’s electricity grid.

“As these devices — generators, storage, and controls — get cheaper and more powerful,” he says, “every end-use customer will be able to get a major portion of their energy on-site or in the community. That touches every level of the electric system.”

Stepping back and thinking about the grid at the systems level, in terms of its key actors and functions, is the province of a discipline known as “grid architecture.”

Now, I grant you, “grid architecture” is not a term designed to set the heart aflame. But it is extremely important, and the stakes are high. The danger is that policymakers will back into the future, reacting to one electricity crisis at a time, until the growing complexity of the grid tips it over into some kind of breakdown. But if they think and act proactively, they can get ahead of the burgeoning changes and design a system that harnesses and accelerates them.

Now is the time to rethink the system from the ground up.

What is grid architecture?

The Pacific Northwest National Laboratory has a grid architecture center that offers some semi-useful definitions. A system architecture is “the conceptual model that defines the structure, behavior, and essential limits of a system.” Grid architecture is “the application of system architecture, network theory, and control theory to the electric power grid.”

Yes, I realize that’s not entirely clear. Think of it this way: Grid architecture offers the conceptual tools needed to reshape the structure of the grid system so that it can better accommodate disruptive ongoing changes, i.e., the shift from centralized power plants and one-way power flows to massive amounts of small-scale resources at the edge of the grid.

The system’s structure determines its properties and behaviors — what it is capable of, what types of change it welcomes or resists, what outcomes it can achieve, and what conditions could push it into failure. It is at the structural level that reform is needed.

Tell you what, let’s just jump in. Like many concepts in energy, grid architecture makes more sense when you look at the specifics. So I’m going to describe (with illustrations from Vox’s inimitable Javier Zarracina) the current architecture of the grid, reasons to think it needs reform, and a proposal for a new architecture.

Actually, there are two opposing proposals, one that doubles down on the current, top-down system and one — more ambitious, but to my mind far superior — that would redesign the grid system around a new bottom-up paradigm.

If nothing else, I hope to convince you that changing the way we architect the grid is a key step — perhaps the key step — in unlocking the full potential of the clean energy technologies that will be needed to decarbonize the electricity sector and meet new demand coming from electrification of other energy-intensive sectors like transportation and buildings.

And as I’ve written before, decarbonizing the electricity sector is central to addressing climate change. Getting the grid right is vitally important. So let’s have a look.

The grid has worked on a top-down model for a century

Since it first started growing in earnest in the early 20th century, the grid has worked according to the same basic model. Power is generated at large power plants and fed into high-voltage transmission lines, which can carry it over long distances. At various points along the way, power is dumped from the transmission system into local distribution areas (LDAs) via substations, where transformers lower the voltage so it won’t fry the locals.

Distribution wires carry power from these transmission-distribution (TD) interfaces in various directions to end users. The voltage is lowered again by transformers on power poles, and then the power is fed into buildings through meters that keep track of consumption. Once it is “behind the meter,” it is used by computers and dishwashers and iPhone chargers.

One notable feature of this model is that power travels in only one direction, which is why hydrological metaphors are so popular in grid explainers. Transmission lines are like mighty rivers that feed into urban water distribution systems, where the water/power travels to the end of the line and is consumed. At no point does water travel back up the line.

While the US transmission system acts as a true network — it is highly interlinked, so power can travel throughout to where it is needed — the “distribution feeders” that pump power into LDAs do not. Distribution feeders are generally “radial” in design, meaning power travels from the substation out along tendrils to end users, in one direction. (There are also other distribution feeder designs, wherein an LDA is linked up to two or more substations, but those are less common, so we’re going to keep it simple.)

It is important to understand how these various parts of the grid are managed in the US. Unfortunately, that means I’m about to hit you with a hail of acronyms. Brace yourself.

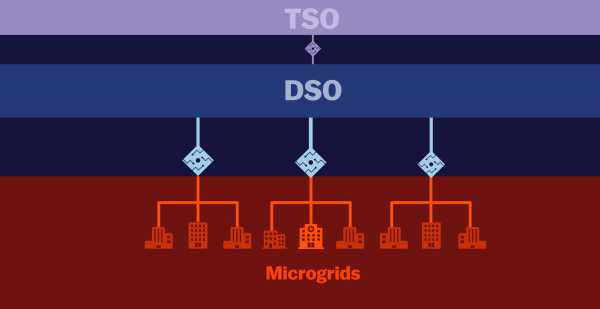

The transmission network is managed by, depending on the region, an independent system operator (ISO), a regional transmission organization (RTO), or an electric utility that is not a member of an ISO or RTO. (All of these are versions of transmission system operators — TSOs, the generic term popular in Europe — so for the rest of this post, and in the illustrations, I’ll use that term.)

Because transmission crosses state lines, TSOs are under federal jurisdiction. Specifically, they must follow rules established by the Federal Energy Regulatory Commission (FERC). FERC is responsible for the reliability of the transmission grid, with help from the North American Electric Reliability Corporation (NERC), a nonprofit public-benefit corporation that analyzes grid reliability and enforces reliability standards.

In some regions, utilities are still “vertically integrated,” meaning they own power plants and are also “load serving entities” (LSEs), distributing power locally. But in areas serving about two-thirds of US customers, the utility sector has been “restructured,” splitting the two apart. (This post mostly focuses on restructured areas, though it applies beyond them as well.)

In restructured regions, distribution utilities do not own any power plants. They buy power for their local customers from wholesale markets, where the owners of power generators (“gencos”) compete, selling their power (and other energy services) at auction. Wholesale power markets are administered by TSOs and under FERC jurisdiction.

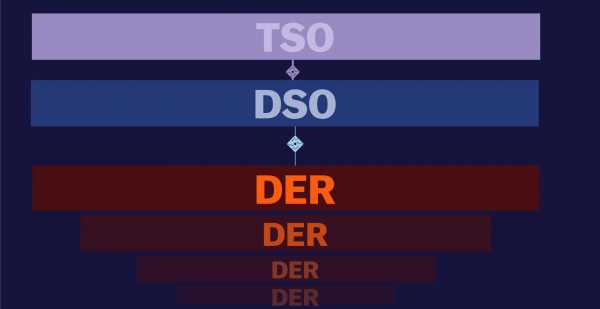

Distribution systems, because they generally do not cross state lines, are under state jurisdiction. They are the responsibility of power utilities, the state public utility commissions (PUCs) that oversee utilities, and the state legislators who pass laws utilities have to follow. (Municipal utilities and electric cooperatives also operate distribution systems, subject to local governing bodies rather than state commissions.) These utilities are responsible for the reliability of distribution systems. They act as distribution system operators (DSOs).

Still with me? On the transmission side, TSOs watch over wholesale markets, regulated by FERC and guided by NERC. On the distribution side, DSOs provide connections to end-use customers and are regulated by state legislatures and state PUCs or local governing bodies.

So here’s that model again:

That’s the basic grid architecture as it has existed since time immemorial.

But in the past few decades, things have started changing.

Three clean-energy trends are shaking things up

Changes in the electricity world are many and varied, but they boil down to three core trends.

The first is the rise of renewables. Wind and solar complicate management of the grid because they are variable — they come and go with the weather. You can’t ramp them up and down at will like you can fossil fuel plants. The sun comes up, you get a flood of power from all those solar panels; the sun goes down, you get none.

This vastly increases the complexity of matching supply to demand in real time, and creates an urgent need for flexibility. A grid with lots of renewables badly needs resources that can ramp up and down or otherwise compensate for their natural variations. Integrating high levels of variable renewables is already creating challenges for grids like California’s.

The second is the rise of distributed energy resources (DERs): small-scale energy resources often (though not always) found “behind the meter,” on the customer side. Some DERs generate energy, like solar panels, small wind turbines, or combined heat-and-power (CHP) units.

Some DERs store energy, like batteries, fuel cells, or thermal storage like water heaters. And some DERs monitor and manage energy, like smart thermostats, smart meters, smart chargers, and whole-building energy management systems. (The oldest and still most common DERs are diesel generators, which are obviously not ideal from a climate standpoint.)

DERs are sometimes known as “grid edge” technologies because they exist at the bottom edge of the grid, near or behind customer meters. They are rapidly growing in variety, sophistication, and cumulative scale, and as they do, they unlock opportunities to stitch together more locally sufficient energy networks — if grids can handle them. (More on that later.)

The third trend is the increasing sophistication and declining cost of information and communication technology (ICT). As sensors and processors continue to get cheaper, it is increasingly possible to see exactly what is going on in a distribution grid down to the individual device, and to share that knowledge in real time over the web. More information can be generated, and with artificial intelligence and machine learning, information and energy can both be more intelligently managed.

If the first trend, the rise of renewable energy production, creates the need for grid flexibility, the second two, DERs and ICT, can help provide that flexibility — if they are enabled and encouraged.

But there’s reason to believe that current grid architecture is not well-suited to enabling and encouraging them.

DERs are getting all up in wholesale energy markets

The simple fact is that DERs can do a lot of the things that only big conventional power plants used to be able to do, like generate energy and provide grid services like capacity, voltage and frequency regulation, and “synthetic inertia.” They can also do things power plants can’t, like store energy and economize its use.

That means DERs can increasingly help smooth out the variations in demand and renewable energy production locally, without calling on distant power plants.

With new ICT, it is possible to network DERs together into big operational chunks, sometimes called “virtual power plants” (VPPs), though that’s a bit misleading, since they can do things normal power plants can’t do. Virtual power plants are assembled by “aggregators.” It is a rapidly growing market.

There are also physical aggregations of DERs, known as microgrids, local electricity systems that can operate either connected to the main power system or, at least temporarily, as an “island,” disconnected from it.

Microgrids can do many of the same things as virtual power plants, and as a bonus, they also provide their residents with backup power service in case of a blackout. (Fun fact: One of the biggest microgrids in the US is on a literal island: it runs Alcatraz, off the coast of San Francisco.)

Now here’s where things get tricky for the old grid model. There are all these new DERs, more every day, interoperating in increasingly sophisticated ways. They can produce power and services, not only for the customers whose meters they are behind, but for the grid as a whole.

But the physical grid, DSOs, TSOs, and current regulatory structures were all designed for one-way power flows. How can the value of the power and services DERs provide be fully realized? For instance — who should DERs sell their services to?

Remember, almost all US electricity markets are run at the transmission level, by TSOs. DERs are hanging out down at the bottom edge of the grid, under the aegis of DSOs.

The solution thus far, such as it is, has been to allow DERs some limited access to wholesale power markets. Aggregators bundle up the power and services and bid them into those markets.

So here’s the model now, with power flowing down to the edge of the grid and then, from DERs, flowing back up into wholesale markets:

The question now is whether, given the continued development and profusion of DERs, the existing grid architecture can keep pace.

Two contrasting visions of the future electricity grid

The electricity sector is changing rapidly and the grid is changing with it. That will continue no matter what. The question is whether to reinforce and enhance the current grid architecture or to conceive and build something new.

That choice is laid out in “A Tale of Two Visions: Designing a Decentralized Transactive Electric System,” published in 2016 in IEEE Power and Energy Magazine by Kristov, Paul De Martini of the California Institute of Technology, and Jeffrey Taft of the Pacific Northwest National Laboratory.

Kristov, De Martini, and Taft sketch two ways that the profusion of DERs can be managed, involving different roles for TSOs and DSOs. They purposefully describe two opposing poles, two contrasting extremes, acknowledging that in the real world many systems will be some mix, or may change incrementally and slowly from one to another.

The first vision is the logical extension of the current wholesale market system — just with a lot more DERs involved. The study’s authors call this the “Grand Central Optimization” model, because all optimization, all balancing of supply and demand, would be done in one place, the TSO. It is a “total TSO” model.

Under the Grand Central model, TSOs would continue to manage and dispatch DERs (or aggregations of DERs) for any transactions affecting wholesale markets. Wholesale markets would become much more complex, involving many more diverse participants.

This would be a “minimal DSO” model, in that the DSO, typically a distribution utility, would remain uninvolved in such transactions and continue merely to maintain operations and reliability at the distribution level.

Here’s how Grand Central might look, with lots and lots of DERs feeding energy and services directly into wholesale markets from down at the grid edge:

This is more or less where the system is heading by default, unless something big changes. But the evolution seems less intentional than a matter of path dependence and lack of holistic planning.

Kristov, De Martini, and Taft worry that Grand Central is not the right model — that it will ultimately increase the cost and complexity of integrating more renewable energy and DERs.

The details can get technical, but there are two basic problems with Grand Central.

The first is that DERs more and more often serve two masters. They have a relationship with the TSO that bypasses the DSO, in the form of wholesale-market commitments. They also have a relationship with the DSO; it must manage them in the name of distribution-grid stability and reliability.

As DERs and their aggregations grow more numerous and larger, the risk arises that large chunks of the system will receive dueling instructions. The paper’s authors call this “tier bypassing, which occurs when two or more system components have multiple structural relationships with conflicting control objectives.”
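To make tier bypassing concrete, here is a minimal Python sketch of the "dueling instructions" problem. It is an illustration, not anything from the paper: the `Instruction` record, the `find_conflicts` helper, the DER names, and the setpoint numbers are all hypothetical. The idea is simply that when a TSO and a DSO can each address the same device directly, nothing structural stops them from asking it to do different things.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    source: str         # who issued it, e.g. "TSO" or "DSO" (hypothetical labels)
    der_id: str         # which DER or aggregation it targets
    setpoint_kw: float  # requested output; positive means export to the grid


def find_conflicts(instructions, tolerance_kw=0.0):
    """Return DERs that got differing setpoints from more than one source."""
    by_der = {}
    for ins in instructions:
        by_der.setdefault(ins.der_id, []).append(ins)

    conflicts = {}
    for der_id, group in by_der.items():
        sources = {ins.source for ins in group}
        setpoints = [ins.setpoint_kw for ins in group]
        spread = max(setpoints) - min(setpoints)
        if len(sources) > 1 and spread > tolerance_kw:
            conflicts[der_id] = sorted(group, key=lambda ins: ins.source)
    return conflicts


# Made-up example: one battery is pulled in two directions.
instructions = [
    Instruction("TSO", "battery-17", 500.0),   # wholesale commitment: discharge
    Instruction("DSO", "battery-17", 0.0),     # local feeder constraint: hold
    Instruction("TSO", "solar-agg-3", 200.0),
    Instruction("DSO", "solar-agg-3", 200.0),  # both agree, so no conflict
]

print(sorted(find_conflicts(instructions)))
```

A check like this only detects the conflict after the fact. The architectural argument is about arranging roles so that the second, bypassing relationship does not exist in the first place.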

The second problem is simply complexity. DERs are still at a fairly nascent level of development, but they are set to explode in coming years, as rooftop panels, electric vehicles, home batteries, and smart meters become more common. Soon there will be all kinds of combinations and aggregations, at all levels, across every one of hundreds of LDAs.

Wholesale markets could go from having dozens of participants to having hundreds, or thousands, or hundreds of thousands.

That’s going to be a lot for a TSO to track — a thicket of new rules, new enforcement mechanisms, and sheer computational bulk. “Under this model,” Kristov, De Martini, and Taft write, “the TSO needs detailed information and visibility into all levels of the system, from the balancing authority area [i.e., the TSO level] down through the distribution system to the meters on end-use customers and distribution-connected devices.”

TSOs would have to track and manage all this information while working alongside, and attempting to coordinate with, dozens of DSOs maintaining local reliability.

Already some TSOs are complaining to FERC that state energy policies are distorting their wholesale markets. Imagine when those federally run markets involve thousands of DER participants, all of which are also subject to a variety of state energy policies and all of which are also constrained by DSO reliability requirements.

These are the kinds of thoughts that give FERC commissioners migraines. Balancing the interests of TSOs against the interests of dozens of DSOs will be an unending hassle.

Some economists like to think that if each energy source and service were priced properly, based on its real-time, location-specific value, the market would allocate electricity with perfect efficiency. Just get the right pricing algorithms in place and let ’er rip.

But there are reasons to doubt that distribution systems, filled with quirky and unpredictable human behaviors, can be adequately guided by the invisible hand alone. They need a more personal touch.

Kristov, De Martini, and Taft take no stand in the paper on whether the Grand Central model is possible, but when I asked De Martini directly, he was frank. “I don’t think the grand centralization model will work at scale,” he said, “as there are too many dynamic, random variables [in distribution systems] involving both machines and humans.”

“As I think about a TSO trying to have full awareness of what’s going on in a distribution system, bringing that together in a simultaneous optimization with the transmission grid, it just doesn’t make sense,” Kristov told me. “It seems needlessly complex. But if you don’t have that, then you need the DSO to step up to some higher-level responsibilities.”

Which brings us to the alternative to Grand Central.

A new, bottom-up architecture for the grid

The alternative grid architecture that the study’s authors propose solves these problems in an elegant way. It is called … hang on to your hats … a “decentralized, layered-decomposition optimization structure.” Whee!

Let’s translate that into English. (Side note: Layered or “laminar” structure is a familiar concept in telecoms and software architecture. It is somewhat newer to power systems.)

In the Grand Central model, the TSO optimizes everything in one place, not only power plants at the transmission level, but thousands of DERs and aggregations at the distribution level, in service of wholesale markets and transmission system reliability, while having sufficient real-time visibility into the distribution system to avoid conflicts with local reliability needs.

In Kristov, De Martini, and Taft’s proposed model — which I’m going to call LDO, for layered decentralized optimization, because I don’t want to type all those words again — each layer, the transmission layer and the distribution layer, would be responsible for its own optimization and its own reliability.

Remember tier bypassing? The LDO model would prevent that by effectively sealing the layers off from one another, except at their electrical interface points. The only point of communication and coordination between the transmission layer and the distribution layer beneath it would be at the TD interface (the substations). Everything below the TD interface would be managed and optimized by the DSO.

Responsibility “decomposes” to the layer beneath — that’s what “layered-decomposition” refers to.

The DSO would balance supply and demand within a local distribution area (LDA) using, to the extent possible, local DERs. It would then aggregate all remaining supply or demand into a single bid to wholesale markets (either a purchase or a power offer).

This would radically simplify things for a TSO.

It would not need to keep track of, manage, and dispatch tens of thousands of DERs, DER aggregations, and microgrids across the LDAs in its region. The DSOs would handle all that.

Each DSO would present to the TSO as a single unit at each TD interface. All the TSO would need to do is accept one aggregate wholesale market bid from each TD interface, of which there would be dozens or hundreds (rather than tens of thousands). That would maintain the simplicity and manageability of wholesale markets.
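Here is a rough Python sketch of that simplification, under heavy assumptions: the function names (`net_position_kw`, `dso_bid`), the LDA labels, and every number are invented for illustration, and a real DSO would be dealing with prices, time intervals, and network constraints that are ignored here. The point is only the shape of the interface: many DERs go in, one bid per TD interface comes out.

```python
def net_position_kw(local_supply_kw, local_demand_kw):
    """Positive means surplus to offer upstream; negative means a shortfall."""
    return sum(local_supply_kw) - sum(local_demand_kw)


def dso_bid(lda_name, local_supply_kw, local_demand_kw):
    """Collapse everything inside one LDA into a single wholesale bid."""
    net = net_position_kw(local_supply_kw, local_demand_kw)
    if net > 0:
        kind = "offer"
    elif net < 0:
        kind = "purchase"
    else:
        kind = "none"
    return {"lda": lda_name, "kind": kind, "quantity_kw": abs(net)}


# Hypothetical LDAs: (outputs of individual DERs, individual loads), in kW.
ldas = {
    "LDA-A": ([40, 25, 10], [60, 30]),
    "LDA-B": ([80, 30], [50, 20]),
}

bids = [dso_bid(name, supply, demand) for name, (supply, demand) in ldas.items()]
der_count = sum(len(supply) for supply, _ in ldas.values())

for bid in bids:
    print(bid)
print(f"TSO handles {len(bids)} bids instead of {der_count} individual DERs")
```

In this toy case LDA-A comes up short and buys, while LDA-B has a surplus and sells. Either way, the TSO never sees the individual devices behind the interface.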

Just as responsibility for optimization would decompose downward, so too would responsibility for reliability.

The TSO would be responsible only for the reliability of the transmission system, up to the point of TD interface. Beyond that, each DSO would be responsible for reliability within its own LDA.

Every grid architecture must have a “coordination framework” that assigns basic roles and responsibilities to various components of the system. The LDO architecture is a “maximum DSO” or “total DSO” model, in that it assigns substantial new roles and responsibilities to DSOs, well beyond those assigned to them by the current system. (We’ll talk more about that in a moment.)

An architecture that scales all the way down

There are many advantages to the LDO architecture, which we’ll get into below, but one worth highlighting is scalability. LDO serves as a way of managing complexity up (or down) to any scale.

The electricity system need not have only two layers; it can have many.

Recall that in the LDO model, the transmission layer interacts with the distribution layer only at a limited number of TD interfaces. The only interaction a distribution system has with the transmission system above it is at that single point.

But there could be another layer beneath that first distribution layer. And it could communicate with that first distribution layer the same way the first distribution layer communicates with the transmission layer, i.e., through a single interface. Responsibilities would decompose downward again — the second layer would be responsible for its own optimization and reliability.

And there could be a third layer below that, and a fourth, ad infinitum.

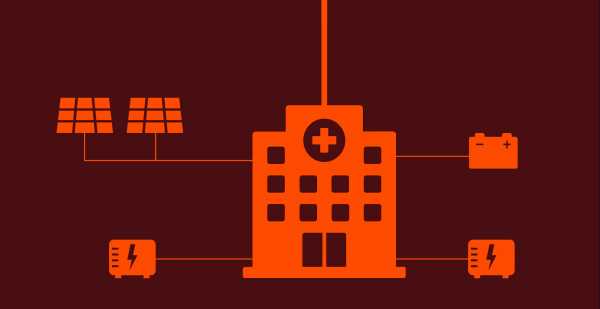

For instance, imagine a local microgrid that links together dozens of buildings, solar panels, combined heat-and-power (CHP) units, batteries, EV charging stations, and perhaps even a few smaller microgrids into a single network (a university campus, say). That network can island off from the larger grid and run on its own, at least for a limited time, if there is a blackout.

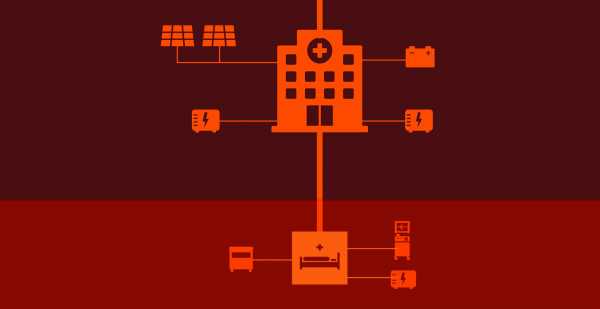

That microgrid is another layer. Rather than managing dozens of DERs, the DSO now manages the microgrid as a single aggregated asset. As for the microgrid, its only interaction with the larger distribution layer above it is through a single interface. It is responsible for its own optimization and reliability and can island if necessary.

Now, imagine the big microgrid contains several smaller microgrids within it. Each of them connects, say, three buildings, some solar panels, and some batteries.

Same deal: There’s a single point of contact between the big microgrid and each small microgrid (thus simplifying things for the big microgrid). Beneath those points, responsibility decomposes again, to the small-microgrid level.

Now imagine one of the small microgrids contains a building (say, a hospital) that is itself a microgrid — it has solar panels on the roof, diesel generators in the basement, some batteries, and a smart inverter that allows it to island off from the small microgrid in emergencies.

Same deal: One point of contact with the microgrid above it; responsibility decomposes down.

Now, imagine the hospital has an emergency wing that is itself a microgrid (nanogrid? teeny-weenygrid?), with a smart inverter and one diesel generator, just enough to power a couple of respirators and monitors.

Same deal: single point of contact; responsibility decomposes.

Because responsibility devolves downward, no single entity gets stuck tracking and dispatching an unwieldy number of DERs. And there is no tier bypassing. Each layer is responsible for itself and interacts with the level above it through a single point of contact.
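The nesting described above maps naturally onto a recursive data structure. The sketch below is one way to picture it in Python, assuming a hypothetical `Layer` class and made-up kW figures. Each layer nets out its own resources plus whatever its child layers present, and it only ever coordinates with its immediate children, never with the devices further down.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    local_supply_kw: float = 0.0
    local_demand_kw: float = 0.0
    children: list[Layer] = field(default_factory=list)

    def net_kw(self) -> float:
        """Net position presented at this layer's single upstream interface."""
        own = self.local_supply_kw - self.local_demand_kw
        return own + sum(child.net_kw() for child in self.children)

    def interfaces_managed(self) -> int:
        """Points this layer coordinates directly: its immediate children only."""
        return len(self.children)


def count_descendants(layer: Layer) -> int:
    """Everything below a layer, which a central optimizer would have to track."""
    return sum(1 + count_descendants(child) for child in layer.children)


# Hypothetical numbers for the hospital example in the text.
wing = Layer("emergency wing", local_supply_kw=5, local_demand_kw=20)
hospital = Layer("hospital", 100, 150, [wing])
small_a = Layer("small microgrid A", 200, 120, [hospital])
small_b = Layer("small microgrid B", 50, 30)
campus = Layer("campus microgrid", 300, 400, [small_a, small_b])
lda = Layer("LDA", children=[campus])

print(lda.net_kw(), lda.interfaces_managed(), count_descendants(lda))
```

The DSO at the top sees one interface and one net number for the whole campus, even though five nested layers sit beneath it. This sketch leaves out islanding and reliability entirely; it only shows how the bookkeeping decomposes.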

This helps tame the problem of rapidly increasing complexity in the electricity sector. Whereas in the Grand Central model, the TSO will have to single-handedly keep track of all the blooming and buzzing DERs beneath it — which, let’s be serious, will eventually overwhelm it — in the LDO model, each layer is its own, tractable domain.

Layered grid architecture faces substantial real-world obstacles

There are all sorts of reasons why the LDO vision will be slow to come to fruition, if it ever does. It’s a major departure from the centralized, top-down architecture that dominated the past 100 years, and as such it requires a whole raft of legal, regulatory, and economic changes, ranging over numerous jurisdictions.

Among other things, local distribution utilities would need to be beefed up considerably to become maximum DSOs. In the LDO architecture, Kristov, De Martini, and Taft write, DSOs “would have to provide an open-access distribution-level market that would aggregate DER offers to the wholesale market, obtain services from qualified DER to support distribution system operations, and enable peer-to-peer transactions within a given LDA and potentially even across LDAs.”

That’s a lot of new stuff to figure out (though many technical questions are addressed by papers from the Pacific Northwest National Laboratory and others). Even where progress moves in the LDO direction, it will be shaped to local conditions and likely small-c conservative.

Still, these are volatile times in the sector, with utilities and regulators alike wondering nervously how to get ahead of the curve. If a bold utility did a maximum-DSO demonstration, perhaps it could spark a wave of similar reforms.

Rather than trying to predict the possible uptake of the LDO model, let’s talk about a few more advantages.

The LDO architecture would put more power in local hands

Aside from scalability, the most notable feature of LDO architecture is that it flips a top-down system. Responsibility for electrical power — and with it, social and political power — decomposes downward, to the local level, rather than concentrating at the top.

Starting at the very lowest level, often behind the customer meter, each level will have a smart controller maximizing its efficiency and self-reliance. Only to the extent that it is unable to provide for itself will it seek power from the next level up.

At that level too, a smart controller will be optimizing all its varied resources, seeking efficiency and self-sufficiency. Only to the extent that it is unable to provide for itself will it seek power from the next level up. And so on.

This architecture puts local DERs, at the bottom edge of the grid, first in the priority stack, ensuring that they are optimized and fully utilized before any LDA requests power from the transmission system. Big, centralized power plants become the last resort, not the first.

Now let’s pause here to forestall a couple of possible objections.

First, nothing about the LDO architecture implies that it is bad for a level to request power from the level above it, or bad for LDAs to request power from the transmission grid. Most levels and most LDAs, especially in these early days of DERs, are far from fully self-sufficient and will be for some time. They will need transmission-grid power. Many always will.

And that’s fine. The limits of energy self-sufficiency are not moral failings, they are a matter of local climate, population density, and engineering. Different communities will value self-sufficiency differently. Some will seek independence to every extent possible, perhaps even becoming net producers that sell into wholesale power markets. Some will be content to get most of their power from the transmission grid. All will have their choices shaped by local conditions and limitations.

The whole point of big power plants and the continent-spanning (or at least partially continent-spanning) transmission grid is to provide everyone with backup power, so that we are not limited by local conditions. It’s a beautiful thing; no one need ever apologize for utilizing it.

Second and relatedly, there is often a false dichotomy drawn in the energy world, with advocates for big power plants and the big grid (the “hard path” in energy development) on one side and advocates for self-sufficient local grids run on DERs (the “soft path”) on the other.

The LDO architecture neatly moots that debate. Each layer optimizes, then draws on the layer above, all the way up to the transmission layer. Local DERs are systematically maximized, even as everyone enjoys the benefits of the power plant/transmission grid backup.

What flips is the priority, and with it, the power. Foregrounding local resources would at long last make cities and regions (their vehicle fleets, their building and zoning codes, their infrastructure, their vulnerabilities) full partners in optimizing and decarbonizing energy.

“A lot of things we consider electrification and decarbonization are going to play out through local planning,” Kristov says, “whether it’s rethinking mobility in urban areas or retrofitting buildings, these are local initiatives that will create local jobs. So you start having local economic development as a consequence of this decentralization.”

The LDO architecture would structure local needs, local aspirations, and local resilience directly into the decarbonization effort.

Unleashing DERs would spark enormous innovation

It would also spark a surge of energy innovation. Right now, thanks to outdated regulatory models, utilities are often hostile toward DERs, which are increasingly able to substitute for grid infrastructure. Anything that reduces utilities’ need to invest in more infrastructure threatens their financial returns. Consequently, they often show exactly as much support for DERs as is mandated by legislators, and no more.

In the LDO model, DSOs wouldn’t make money off infrastructure investments and they wouldn’t own DERs. They would make money by providing services. Each DSO would run what is effectively a distribution-level market within its own LDA. DERs would bid their energy and services in, local supply and demand would be matched to the extent possible, and the DSO would submit the remainder as a single wholesale-market purchase (if there’s residual demand) or bid (if there’s residual supply) at the TD interface.
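As a toy illustration of that matching step, the Python sketch below clears a single interval of a distribution-level market. Everything specific here is an assumption made for the example: the `clear_local_market` function, the cheapest-first ordering, the DER names, and the prices and quantities. The paper does not prescribe this clearing rule, and a real market would also weigh wholesale prices and network limits.

```python
def clear_local_market(offers, local_demand_kwh):
    """Match local DER offers to local demand, cheapest offers first.

    offers: list of (der_id, quantity_kwh, price_per_kwh) tuples.
    Returns the accepted offers and the residual sent to the TD interface.
    """
    remaining = local_demand_kwh
    accepted = []
    for der_id, quantity, price in sorted(offers, key=lambda o: o[2]):
        if remaining <= 0:
            break
        take = min(quantity, remaining)
        accepted.append((der_id, take, price))
        remaining -= take

    offered = sum(quantity for _, quantity, _ in offers)
    sold = sum(take for _, take, _ in accepted)
    unsold = offered - sold

    if remaining > 0:
        residual = ("purchase", remaining)  # residual demand: buy wholesale
    elif unsold > 0:
        residual = ("offer", unsold)        # residual supply: sell wholesale
    else:
        residual = ("none", 0)
    return accepted, residual


# Hypothetical offers within one LDA for one interval.
offers = [
    ("rooftop-1", 30, 0.04),
    ("battery-2", 20, 0.09),
    ("chp-3", 50, 0.07),
]

accepted, residual = clear_local_market(offers, local_demand_kwh=70)
print(accepted)
print(residual)
```

With 70 kWh of local demand, the two cheapest resources cover it and the leftover supply becomes a single wholesale offer. Raise demand above the 100 kWh on offer and the residual flips to a single wholesale purchase.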

The upshot is that each DER or aggregation, each layer, would have financial incentive to optimize its own resources and maximize its own self-sufficiency — to produce as much power as possible and consume as little as possible. That would create enormous demand-side pull for DER innovation.

And remember, DER innovation isn’t like power-sector innovation of old. Fossil fuel and nuclear power plants only come in one increment: big. It takes a long time to build, iterate, and improve them, and the capital barriers to entry in that market are high.

DERs tend to be smaller and more connected to information and communication technology (ICT), things like electric cars, smart car chargers, new kinds of batteries, or just software to run all that stuff. The capital barriers are lower; the time it takes to iterate is much shorter; learning and improvements spread much faster.

The mammals are coming for the dinosaurs.

With a new grid architecture, DERs can turn the focus to local resilience and rapid decarbonization

In 2015, Kristov published a speculative piece in Public Utilities Fortnightly called “The Future History of Tomorrow’s Energy Network.” It is written as a look back from 2050 at the energy system that developed since 2015, describing its evolution into the LDO model.

What came between 2020 and 2030, he writes, was “the realization that DERs would dominate the future rather than simply lurk at the margin.”

That is the key question facing the electricity sector: whether DERs are at the front end of a massive and sustained expansion. If they are — and all signs point to go — then it’s worth thinking ahead about the kind of electricity system that can manage and maximize them.

That’s what the LDO architecture is meant to do: manage complexity, speed decarbonization, and enhance local resilience. It moves responsibility for DERs into the hands of those closest to them and builds the grid from the bottom up, making every community a partner in the great fight against climate change.

Source: vox.com